Custom links

https://itnext.io/custom-links-in-jaeger-ui-3479f72bb815

    apiVersion: jaegertracing.io/v1
    kind: Jaeger
    metadata:
      name: simplest
    spec:
      ui:
        options:
          dependencies:
            menuEnabled: false
          linkPatterns:
          - type: "process"
            key: "hostname"
            url: https://console.cloud.google.com/kubernetes/pod/europe-west1-b/gkeClusterName/default/#{hostname}?project=gcpProjectName&container_summary_list_tablesize=10&tab=details&duration=PT1H&service_list_datatablesize=20
            text: "Open GCP console with pods #{hostname} details"

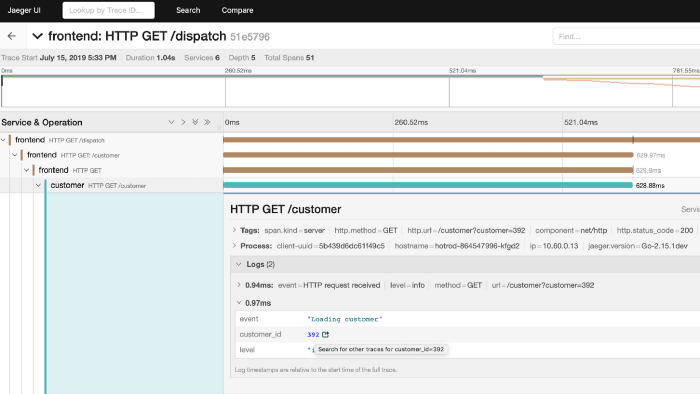

          - type: "logs"
            key: "customer_id"
            url: /search?limit=20&lookback=1h&service=frontend&tags=%7B%22customer_id%22%3A%22#{customer_id}%22%7D
            text: "Search for other traces for customer_id=#{customer_id}"
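Each `linkPatterns` entry is a template: `#{name}` placeholders in `url` and `text` are filled from the matching process tag or log field. The sketch below imitates that substitution to show what the second pattern produces for one value. It is an illustration only, not Jaeger UI's actual implementation; the helper `expand_link_pattern` and the sample value `42` are hypothetical, and URL-encoding the substituted value in `url` (but not in `text`) is an assumption about how the UI behaves.

```python
import re
from urllib.parse import quote

# Matches placeholders of the form #{name}.
_PLACEHOLDER = re.compile(r"#\{([^{}]*)\}")


def expand_link_pattern(template, values, encode=False):
    """Replace every #{name} in template with values[name].

    With encode=True the value is percent-encoded so it is safe inside a URL.
    A missing name raises KeyError (hypothetical behavior chosen for this sketch).
    """
    def substitute(match):
        value = str(values[match.group(1)])
        return quote(value, safe="") if encode else value

    return _PLACEHOLDER.sub(substitute, template)


# The "logs" pattern from the configuration above.
pattern = {
    "type": "logs",
    "key": "customer_id",
    "url": "/search?limit=20&lookback=1h&service=frontend"
           "&tags=%7B%22customer_id%22%3A%22#{customer_id}%22%7D",
    "text": "Search for other traces for customer_id=#{customer_id}",
}

# Hypothetical log field found on a span.
fields = {"customer_id": "42"}

link_url = expand_link_pattern(pattern["url"], fields, encode=True)
link_text = expand_link_pattern(pattern["text"], fields)
print(link_url)
print(link_text)
```

The encoded part of the URL, `%7B%22customer_id%22%3A%22...%22%7D`, decodes to the JSON tag filter `{"customer_id":"..."}` that the Jaeger search page accepts.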

https://opentelemetry.io/docs/instrumentation/python/getting-started/

    from opentelemetry import trace
    from opentelemetry.exporter.jaeger.thrift import JaegerExporter
    from opentelemetry.sdk.resources import SERVICE_NAME, Resource
    from opentelemetry.sdk.trace import TracerProvider
    from opentelemetry.sdk.trace.export import BatchSpanProcessor

    trace.set_tracer_provider(
        TracerProvider(
            resource=Resource.create({SERVICE_NAME: "my-helloworld-service"})
        )
    )

    jaeger_exporter = JaegerExporter(
        agent_host_name="localhost",
        agent_port=6831,
    )

    trace.get_tracer_provider().add_span_processor(
        BatchSpanProcessor(jaeger_exporter)
    )

    tracer = trace.get_tracer(__name__)

    with tracer.start_as_current_span("foo"):
        with tracer.start_as_current_span("bar"):
            with tracer.start_as_current_span("baz"):
                print("Hello world from OpenTelemetry Python!")

https://opentelemetry-python.readthedocs.io/en/latest/

    docker run --rm \
      -p5775:5775/udp \
      -p6831:6831/udp \
      -p16686:16686 \
      jaegertracing/all-in-one:latest

    pip3 install opentelemetry-api
    pip3 install opentelemetry-sdk
    pip3 install opentelemetry-exporter-jaeger
    pip3 install opentelemetry-instrumentation-flask
    pip3 install opentelemetry-instrumentation-requests

    from opentelemetry import trace
    from opentelemetry.exporter.jaeger.thrift import JaegerExporter
    from opentelemetry.sdk.resources import SERVICE_NAME, Resource
    from opentelemetry.sdk.trace import TracerProvider
    from opentelemetry.sdk.trace.export import (
        BatchSpanProcessor,
        ConsoleSpanExporter,
    )

    import time
    import threading
    import random

    trace.set_tracer_provider(
        TracerProvider(
            resource=Resource.create({SERVICE_NAME: "my-AI-service"})
        )
    )

    jaeger_exporter = JaegerExporter(
        agent_host_name="localhost",
        agent_port=6831,
    )

    trace.get_tracer_provider().add_span_processor(
        BatchSpanProcessor(jaeger_exporter)
    )

    trace.get_tracer_provider().add_span_processor(
        BatchSpanProcessor(ConsoleSpanExporter())
    )

    tracer = trace.get_tracer(__name__)

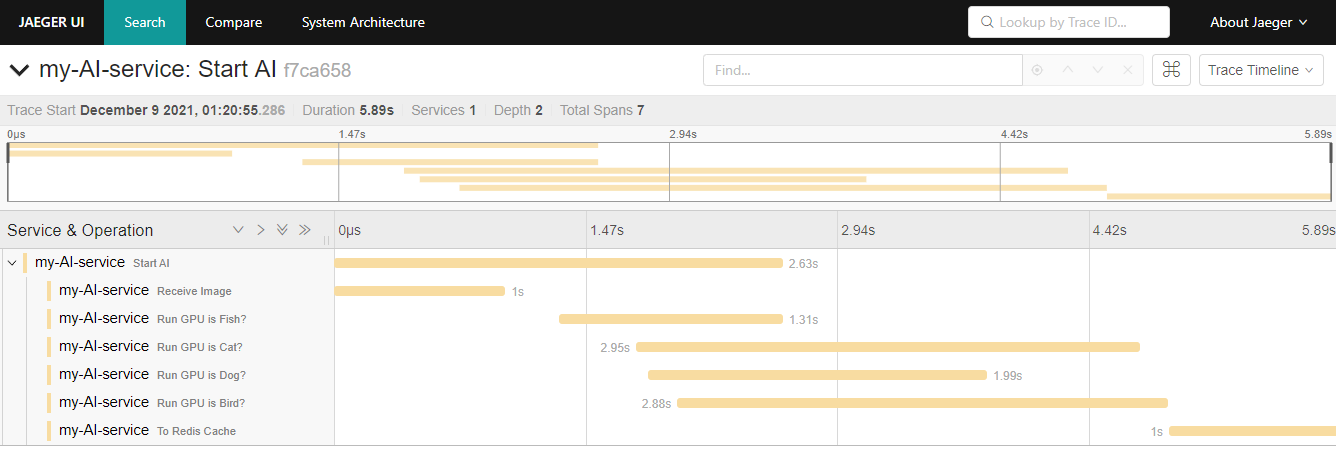

    def runGPUMatch(match, span):
        #with tracer.start_as_current_span(f"Run GPU {match}"):
        #with tracer.start_as_current_span(f"Run GPU {match}", context=context):
        time.sleep(random.uniform(0,1)+0.05)
        # Make the parent span current in this thread so the child span attaches to it.
        # end_on_exit=False: the parent is shared by all threads and is ended by the
        # "Start AI" with-block below, not by whichever thread finishes first.
        with trace.use_span(span, end_on_exit=False):
            with tracer.start_as_current_span(f"Run GPU is {match}?"):
                time.sleep(random.uniform(1,3))

    with tracer.start_as_current_span("Start AI") as span:
        context = span.get_span_context()
        print(context)
        with tracer.start_as_current_span("Receive Image"):
            time.sleep(1)
        #span0 = tracer.start_span("Dog")
        animals = ["Dog", "Cat", "Bird", "Fish"]
        # One worker thread per candidate; each receives the parent span explicitly.
        threads = [
            threading.Thread(target=runGPUMatch, args=(animal, span), daemon=True)
            for animal in animals
        ]
        for x in threads:
            x.start()
        for x in threads:
            x.join()
        with tracer.start_as_current_span("To Redis Cache"): # Store cache
            time.sleep(1)
        #return "answer"
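The example above hands the parent span to every thread explicitly. The reason, as far as I understand it, is that OpenTelemetry Python keeps the current span in a `contextvars` context, and a thread started with `threading.Thread` does not normally run inside its parent's context. The standard-library sketch below shows that mechanism without OpenTelemetry; `current_span_name` and `worker` are hypothetical names standing in for the real span machinery.

```python
import contextvars
import threading

# Hypothetical stand-in for the context variable that holds the active span.
current_span_name = contextvars.ContextVar(
    "current_span_name", default="<no active span>"
)

# What each worker thread observed, keyed by a label.
seen = {}


def worker(label):
    # Record what this thread sees as the "current span".
    seen[label] = current_span_name.get()


# The main thread "starts a span".
current_span_name.set("Start AI")

# Plain thread: by default it does not run inside the parent's context.
plain = threading.Thread(target=worker, args=("plain",))

# Snapshot the parent's context and run the worker inside that snapshot.
parent_context = contextvars.copy_context()
carried = threading.Thread(target=parent_context.run, args=(worker, "carried"))

for thread in (plain, carried):
    thread.start()
for thread in (plain, carried):
    thread.join()

print(seen)
```

On a standard CPython build the `plain` entry typically shows the default value while `carried` shows `Start AI`. Passing the span as an argument and re-activating it with `trace.use_span`, as the notes do, achieves the same effect for the span alone.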

flask_example

    # flask_example.py
    import flask
    import requests
    import time

    from opentelemetry import trace
    from opentelemetry.exporter.jaeger.thrift import JaegerExporter
    from opentelemetry.instrumentation.flask import FlaskInstrumentor
    from opentelemetry.instrumentation.requests import RequestsInstrumentor
    from opentelemetry.sdk.resources import SERVICE_NAME, Resource
    from opentelemetry.sdk.trace import TracerProvider
    from opentelemetry.sdk.trace.export import (
        BatchSpanProcessor,
        ConsoleSpanExporter,
    )

    trace.set_tracer_provider(
        TracerProvider(
            resource=Resource.create({SERVICE_NAME: "my-flask-service"})
        )
    )
    #trace.set_tracer_provider(TracerProvider())

    jaeger_exporter = JaegerExporter(
        agent_host_name="localhost",
        agent_port=6831,
    )

    trace.get_tracer_provider().add_span_processor(
        BatchSpanProcessor(jaeger_exporter)
    )

    trace.get_tracer_provider().add_span_processor(
        BatchSpanProcessor(ConsoleSpanExporter())
    )

    app = flask.Flask(__name__)
    FlaskInstrumentor().instrument_app(app)
    RequestsInstrumentor().instrument()

    tracer = trace.get_tracer(__name__)

    @app.route("/")
    def hello():
        with tracer.start_as_current_span("example-request"):
            #rs = requests.get("http://localhost:8702/api/books")
            rs = requests.get("http://quarkus2-lab:5000/")
            print(rs.status_code)
        return "hello"

    @app.route("/2")
    def r_2():
        with tracer.start_as_current_span("heavy_process"):
            time.sleep(2)
        return "hello2"

    @app.route("/3")
    def hello3():
        with tracer.start_as_current_span("example-request"):
            #rs = requests.get("http://localhost:8702/api/books")
            rs = requests.get("http://quarkus2-lab:5000/q2")
            print(rs.status_code)
        return "hello3"

    app.run(host='0.0.0.0', debug=True, port=5000)
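In the Flask example, `RequestsInstrumentor` is what lets the downstream service at `quarkus2-lab` join the same trace: with the default propagator it adds a W3C `traceparent` header to each outgoing request. The sketch below builds and parses that header by hand, only to show what travels over the wire. The helper names are hypothetical, the IDs are the sample values from the W3C Trace Context specification, and the assumption is that the default W3C propagator is in use.

```python
import re

# traceparent layout: version - trace-id (32 hex) - parent span-id (16 hex) - flags (2 hex)
_TRACEPARENT = re.compile(
    r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$"
)


def build_traceparent(trace_id, span_id, sampled=True):
    """Format integer IDs as a version-00 traceparent header value."""
    flags = 0x01 if sampled else 0x00
    return f"00-{trace_id:032x}-{span_id:016x}-{flags:02x}"


def parse_traceparent(header):
    """Split a traceparent header value into its fields."""
    match = _TRACEPARENT.match(header.strip().lower())
    if match is None:
        raise ValueError(f"not a valid traceparent header: {header!r}")
    version, trace_id, span_id, flags = match.groups()
    return {
        "version": version,
        "trace_id": int(trace_id, 16),
        "span_id": int(span_id, 16),
        "sampled": bool(int(flags, 16) & 0x01),
    }


# Sample IDs taken from the W3C Trace Context specification.
trace_id = 0x4BF92F3577B34DA6A3CE929D0E0E4736
span_id = 0x00F067AA0BA902B7

header = build_traceparent(trace_id, span_id)
parsed = parse_traceparent(header)
print(header)
print(parsed["sampled"])
```

The receiving service reads the trace ID and parent span ID from this header, so its spans appear under the Flask request in the Jaeger UI instead of starting a new trace.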

Frontend

Angular

https://github.com/jufab/opentelemetry-angular-interceptor