- Prerequisites

  - JMeter 5 (this walkthrough uses 5.4.3)

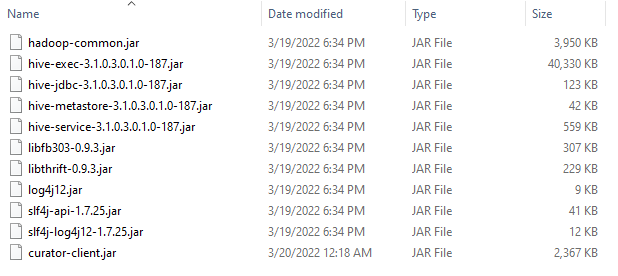

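Copy the required Hive JARs into `apache-jmeter-5.4.3/lib/ext`. To locate a JAR on the HDP sandbox, search the filesystem by name, for example `hive-exec`: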

[root@sandbox-hdp lib]# find / -name "hive-exec-3.1.0.3.0.1.0-187.jar"

/usr/hdp/3.0.1.0-187/atlas/hook/storm/atlas-storm-plugin-impl/hive-exec-3.1.0.3.0.1.0-187.jar

/usr/hdp/3.0.1.0-187/oozie/libserver/hive-exec-3.1.0.3.0.1.0-187.jar

/usr/hdp/3.0.1.0-187/oozie/share/lib/spark/hive-exec-3.1.0.3.0.1.0-187.jar

/usr/hdp/3.0.1.0-187/oozie/share/lib/hive/hive-exec-3.1.0.3.0.1.0-187.jar

/usr/hdp/3.0.1.0-187/oozie/share/lib/pig/hive-exec-3.1.0.3.0.1.0-187.jar

/usr/hdp/3.0.1.0-187/oozie/oozie-server/webapps/oozie/WEB-INF/lib/hive-exec-3.1.0.3.0.1.0-187.jar

/usr/hdp/3.0.1.0-187/ranger-admin/ews/webapp/WEB-INF/classes/ranger-plugins/hive/hive-exec-3.1.0.3.0.1.0-187.jar

/usr/hdp/3.0.1.0-187/storm/contrib/storm-autocreds/hive-exec-3.1.0.3.0.1.0-187.jar

/usr/hdp/3.0.1.0-187/hive/lib/hive-exec-3.1.0.3.0.1.0-187.jar

/root/tosend/hive-exec-3.1.0.3.0.1.0-187.jar
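A small helper for the copy step, as a sketch: it searches a directory tree for each JAR name and copies the first match into JMeter's `lib/ext`. The function name `copy_jars` and the JAR list in the example are assumptions (the `find` above only looks for `hive-exec`). JMeter reads `lib/ext` at startup, so restart it after copying.

```bash
# Copy named JARs from a search root into JMeter's lib/ext.
# Usage: copy_jars SEARCH_ROOT JMETER_HOME JAR_NAME...
# Hypothetical helper; returns non-zero if any JAR was not found or not copied.
copy_jars() {
  search_root=$1
  jmeter_home=$2
  shift 2
  dest="$jmeter_home/lib/ext"
  mkdir -p "$dest" || return 1
  status=0
  for jar in "$@"; do
    # Take the first match only; the sandbox holds many identical copies.
    src=$(find "$search_root" -type f -name "$jar" 2>/dev/null | head -n 1)
    if [ -z "$src" ]; then
      echo "not found: $jar" >&2
      status=1
      continue
    fi
    if cp "$src" "$dest/"; then
      echo "copied $src -> $dest/"
    else
      status=1
    fi
  done
  return $status
}

# Example (paths from this sandbox; the JAR list is an assumption):
# copy_jars /usr/hdp/3.0.1.0-187/hive/lib /root/apache-jmeter-5.4.3 \
#   hive-exec-3.1.0.3.0.1.0-187.jar
```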

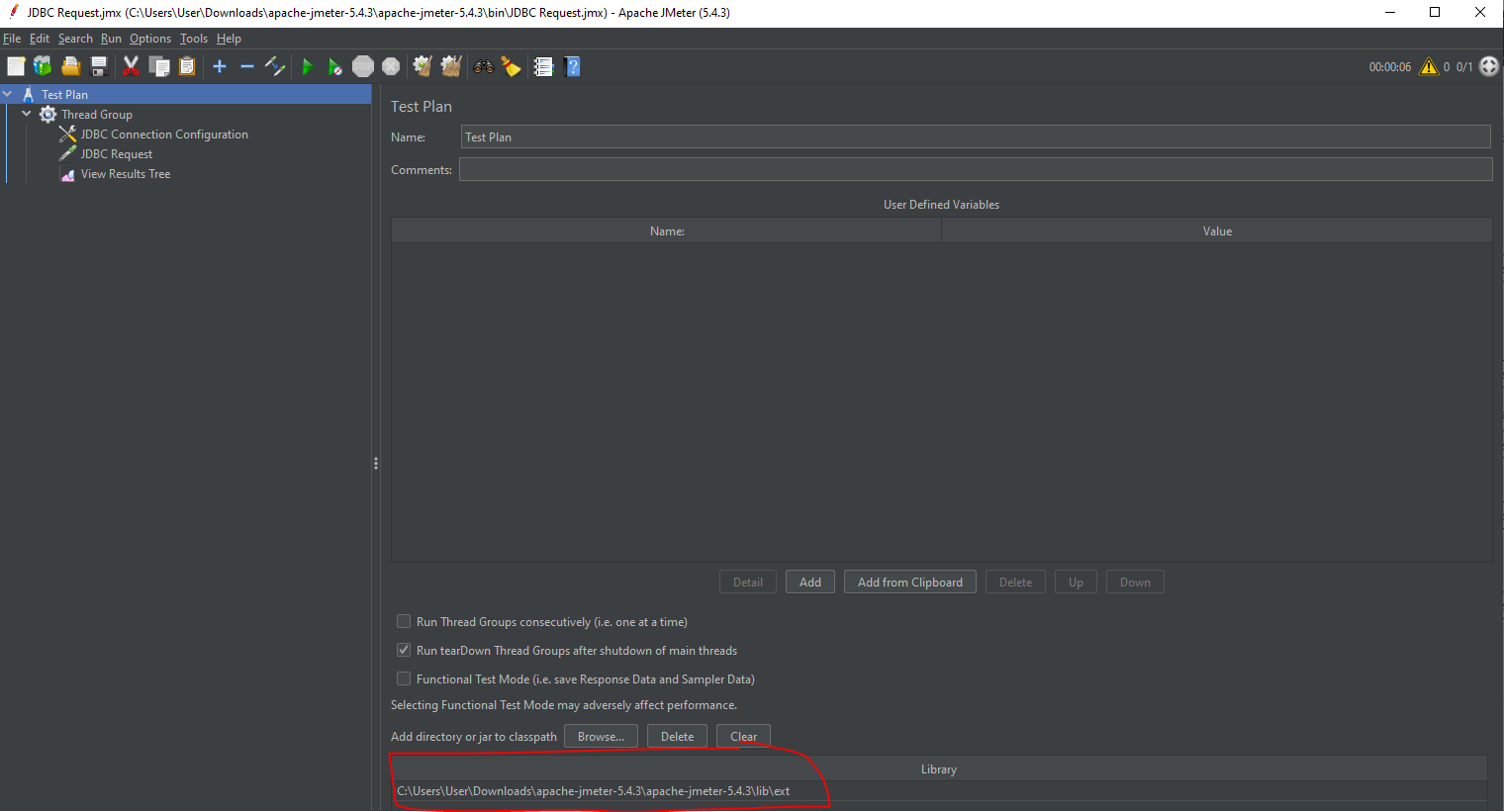

Add the JARs to the classpath in the Test Plan ("Add directory or jar to classpath").

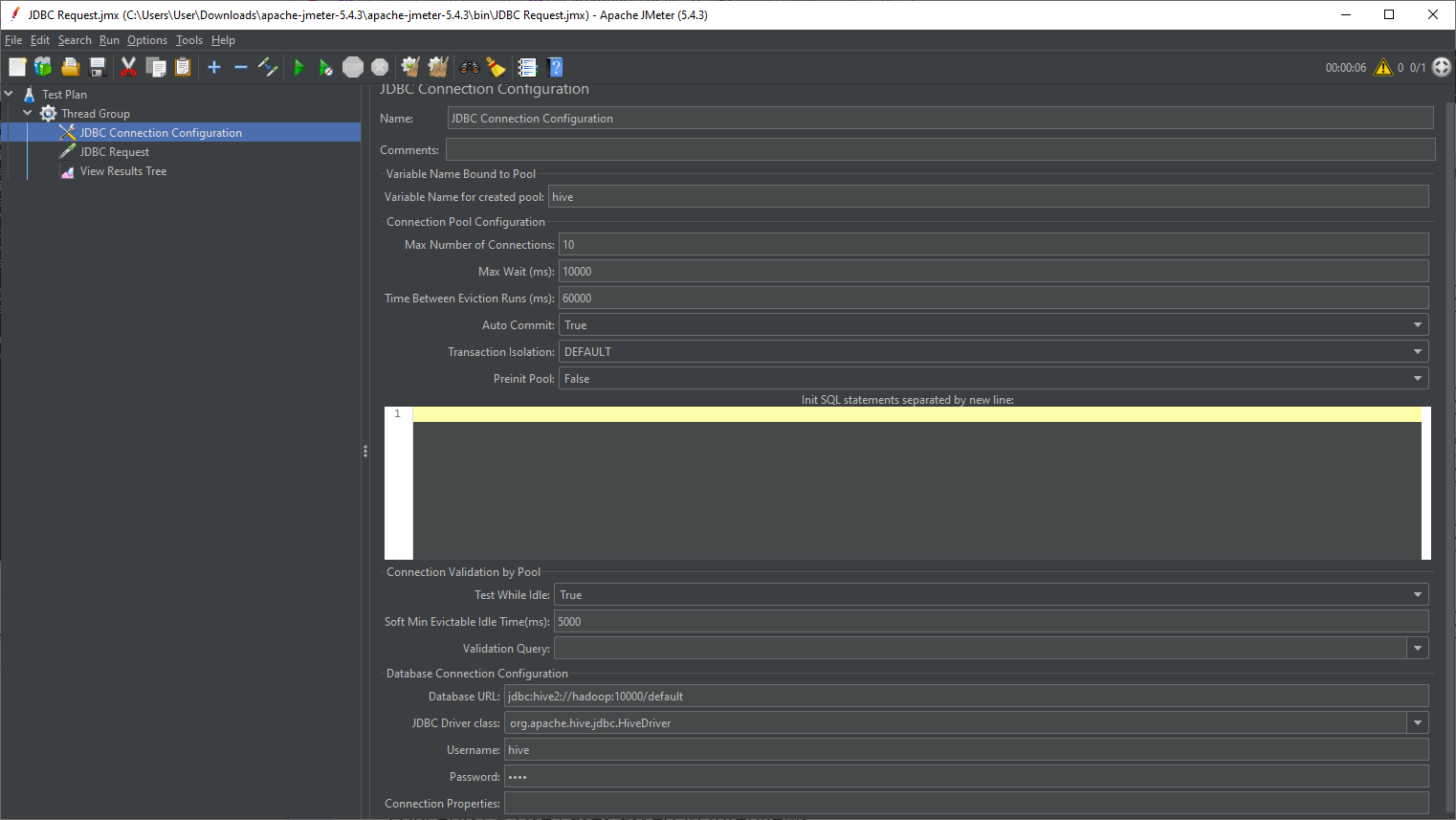

JDBC Connection Configuration

- Database URL: `jdbc:hive2://hadoop:10000/default`
- JDBC Driver class: `org.apache.hive.jdbc.HiveDriver`
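The Database URL has the form `jdbc:hive2://<host>:<port>/<database>`, with 10000 the usual HiveServer2 binary port. A sketch that builds the URL and, optionally, smoke-tests it with Beeline before it goes into JMeter; the helper names and the `hive` user are assumptions.

```bash
# Build a direct (non-ZooKeeper) HiveServer2 JDBC URL.
# Usage: hive_jdbc_url HOST [PORT] [DATABASE]
hive_jdbc_url() {
  printf 'jdbc:hive2://%s:%s/%s\n' "$1" "${2:-10000}" "${3:-default}"
}

# Optional smoke test before configuring JMeter
# (assumes beeline is on PATH and user "hive" may connect).
smoke_test_hive() {
  beeline -n hive -u "$(hive_jdbc_url "$@")" -e 'select 1'
}

# Example: smoke_test_hive hadoop 10000 default
```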

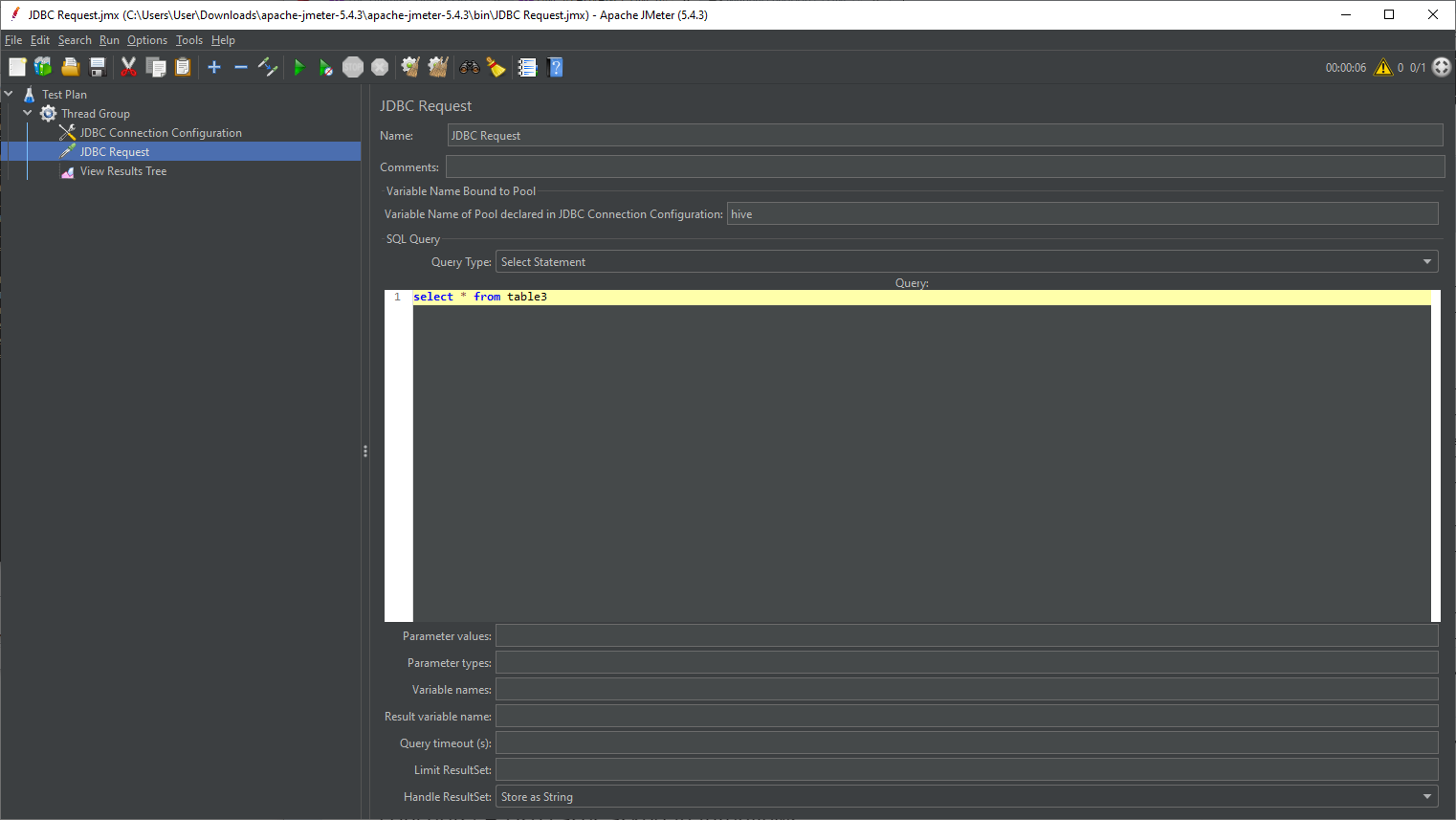

JDBC Request

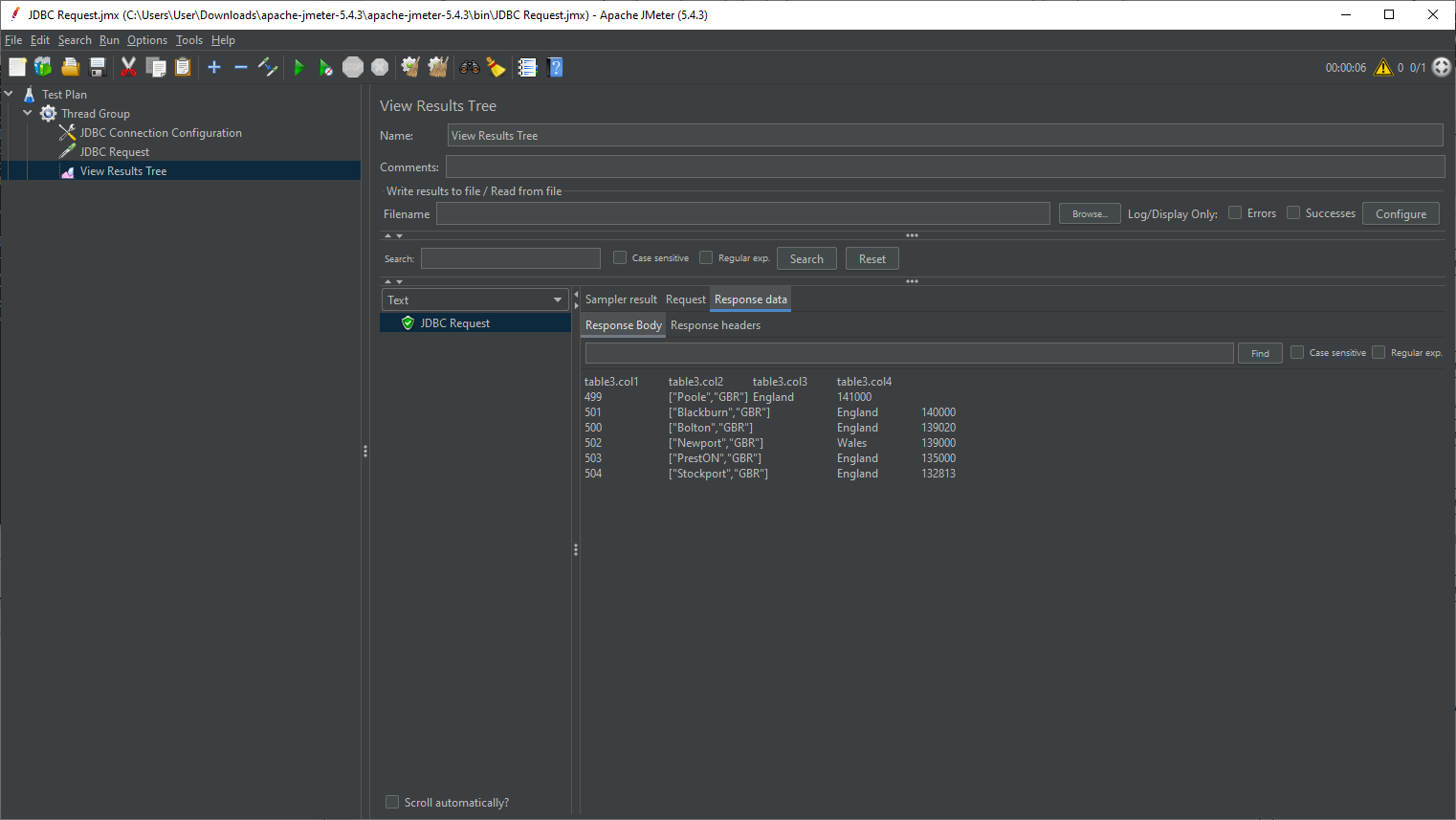

View Results Tree for the JDBC Request

Run in non-GUI mode on Linux

https://blog.e-zest.com/how-to-run-jmeter-in-non-gui-mode/

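jmeter -n -t hivetest.jmx -l testresult.jtl

Here `-n` selects non-GUI mode, `-t` names the test plan and `-l` the file the results are logged to.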
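A wrapper for the non-GUI run that also writes JMeter's HTML dashboard (`-e` generates the report after the test, `-o` sets its folder, which must be empty or absent). `run_jmeter` and the `DRY_RUN` switch are hypothetical conveniences, not JMeter features.

```bash
# Run a JMeter plan in non-GUI mode and build the HTML dashboard.
# Usage: run_jmeter PLAN.jmx RESULTS.jtl REPORT_DIR
# Set DRY_RUN=1 to print the command instead of running it.
run_jmeter() {
  plan=$1 results=$2 report=$3
  if [ ! -f "$plan" ]; then
    echo "test plan not found: $plan" >&2
    return 1
  fi
  # JMeter rejects a non-empty -o folder, so fail early.
  if [ -d "$report" ] && [ -n "$(ls -A "$report")" ]; then
    echo "report dir not empty: $report" >&2
    return 1
  fi
  set -- jmeter -n -t "$plan" -l "$results" -e -o "$report"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$@"
  else
    "$@"
  fi
}
```

For example `run_jmeter hivetest.jmx testresult.jtl report` should leave the dashboard at `report/index.html`.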

Check the results file with `cat testresult.jtl`:

timeStamp,elapsed,label,responseCode,responseMessage,threadName,dataType,success,failureMessage,bytes,sentBytes,grpThreads,allThreads,URL,Latency,IdleTime,Connect

1647747738328,2269,JDBC Request,200,OK,Thread Group 1-1,text,true,,269,0,1,1,null,2207,0,1986
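With more than one sample, reading the CSV by eye gets tedious. An awk sketch (`summarize_jtl` is a hypothetical name) that counts successes and averages `elapsed` and `Latency`, locating columns by the header names shown above. It assumes no field contains a comma; a `failureMessage` with commas would break the simple split.

```bash
# Summarise a CSV .jtl: sample count, successes, mean elapsed and latency (ms).
# Usage: summarize_jtl FILE.jtl
summarize_jtl() {
  awk -F, '
    NR == 1 { for (i = 1; i <= NF; i++) col[$i] = i; next }
    {
      n++
      if ($(col["success"]) == "true") ok++
      el += $(col["elapsed"])
      lat += $(col["Latency"])
    }
    END {
      if (n == 0) { print "samples=0"; exit 1 }
      printf "samples=%d ok=%d avg_elapsed_ms=%.1f avg_latency_ms=%.1f\n", n, ok, el / n, lat / n
    }
  ' "$1"
}
```

On the file above it prints `samples=1 ok=1 avg_elapsed_ms=2269.0 avg_latency_ms=2207.0`.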

Hive testbench (TPC-DS)

https://github.com/hortonworks/hive-testbench

git clone https://github.com/hortonworks/hive-testbench.git

yum -y install java-11-openjdk java-11-openjdk-devel

yum -y groupinstall "Development tools"

cd hive-testbench

./tpcds-build.sh

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 1:55.047s

[INFO] Finished at: Mon Mar 21 04:18:27 UTC 2022

[INFO] Final Memory: 21M/172M

[INFO] ------------------------------------------------------------------------

TPC-DS Data Generator built, you can now use tpcds-setup.sh to generate data.

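Generate and load data at scale factor 2 (roughly 2 GB of raw text). The `ls` error on the first line is expected: the script checks whether the data already exists before generating it.

[root@sandbox-hdp hive-testbench]# ./tpcds-setup.sh 2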

ls: `/tmp/tpcds-generate/2': No such file or directory

Generating data at scale factor 2.

22/03/21 04:18:53 INFO client.RMProxy: Connecting to ResourceManager at sandbox-hdp.hortonworks.com/172.18.0.2:8050

22/03/21 04:18:54 INFO client.AHSProxy: Connecting to Application History server at sandbox-hdp.hortonworks.com/172.18.0.2:10200

22/03/21 04:18:54 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /user/root/.staging/job_1647836125196_0002

22/03/21 04:18:54 INFO input.FileInputFormat: Total input files to process : 1

22/03/21 04:18:55 INFO mapreduce.JobSubmitter: number of splits:2

22/03/21 04:18:55 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1647836125196_0002

22/03/21 04:18:55 INFO mapreduce.JobSubmitter: Executing with tokens: []

22/03/21 04:18:55 INFO conf.Configuration: found resource resource-types.xml at file:/etc/hadoop/3.0.1.0-187/0/resource-types.xml

22/03/21 04:18:56 INFO impl.YarnClientImpl: Submitted application application_1647836125196_0002

22/03/21 04:18:56 INFO mapreduce.Job: The url to track the job: http://sandbox-hdp.hortonworks.com:8088/proxy/application_1647836125196_0002/

22/03/21 04:18:56 INFO mapreduce.Job: Running job: job_1647836125196_0002

22/03/21 04:28:02 INFO mapreduce.Job: Job job_1647836125196_0002 running in uber mode : false

22/03/21 04:28:02 INFO mapreduce.Job: map 0% reduce 0%

22/03/21 04:28:29 INFO mapreduce.Job: map 50% reduce 0%

22/03/21 04:31:02 INFO mapreduce.Job: map 100% reduce 0%

22/03/21 04:31:03 INFO mapreduce.Job: Job job_1647836125196_0002 completed successfully

22/03/21 04:31:03 INFO mapreduce.Job: Counters: 32

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=471352

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=425

HDFS: Number of bytes written=2281110713

HDFS: Number of read operations=93

HDFS: Number of large read operations=0

HDFS: Number of write operations=79

Job Counters

Launched map tasks=2

Other local map tasks=2

Total time spent by all maps in occupied slots (ms)=809604

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=202401

Total vcore-milliseconds taken by all map tasks=202401

Total megabyte-milliseconds taken by all map tasks=207258624

Map-Reduce Framework

Map input records=2

Map output records=0

Input split bytes=250

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=1454

CPU time spent (ms)=38000

Physical memory (bytes) snapshot=555761664

Virtual memory (bytes) snapshot=5728063488

Total committed heap usage (bytes)=351272960

Peak Map Physical memory (bytes)=289304576

Peak Map Virtual memory (bytes)=2881773568

File Input Format Counters

Bytes Read=175

File Output Format Counters

Bytes Written=0

TPC-DS text data generation complete.

Loading text data into external tables.

Optimizing table date_dim (1/24).

Optimizing table time_dim (2/24).

Optimizing table item (3/24).

Optimizing table customer (4/24).

Optimizing table customer_demographics (5/24).

Optimizing table household_demographics (6/24).

Optimizing table customer_address (7/24).

Optimizing table store (8/24).

Optimizing table promotion (9/24).

Optimizing table warehouse (10/24).

Optimizing table ship_mode (11/24).

Optimizing table reason (12/24).

Optimizing table income_band (13/24).

Optimizing table call_center (14/24).

Optimizing table web_page (15/24).

Optimizing table catalog_page (16/24).

Optimizing table web_site (17/24).

Optimizing table store_sales (18/24).

Optimizing table store_returns (19/24).

Optimizing table web_sales (20/24).

Optimizing table web_returns (21/24).

Optimizing table catalog_sales (22/24).

Optimizing table catalog_returns (23/24).

Optimizing table inventory (24/24).

Loading constraints

Data loaded into database tpcds_bin_partitioned_orc_2.
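The database name encodes the file format and scale factor, which is why the query step uses `tpcds_bin_partitioned_orc_2`. A helper reproducing that naming; the pattern is inferred from this run (format `orc`, scale 2), so other formats are an assumption, and `tpcds_db_name` is hypothetical.

```bash
# Database name created by tpcds-setup.sh, as observed in this run.
# Usage: tpcds_db_name SCALE [FORMAT]   (FORMAT defaults to orc)
tpcds_db_name() {
  printf 'tpcds_bin_partitioned_%s_%s\n' "${2:-orc}" "$1"
}

# Example: list the loaded tables (assumes the hive wrapper accepts -e):
# hive -e "use $(tpcds_db_name 2); show tables;"
```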

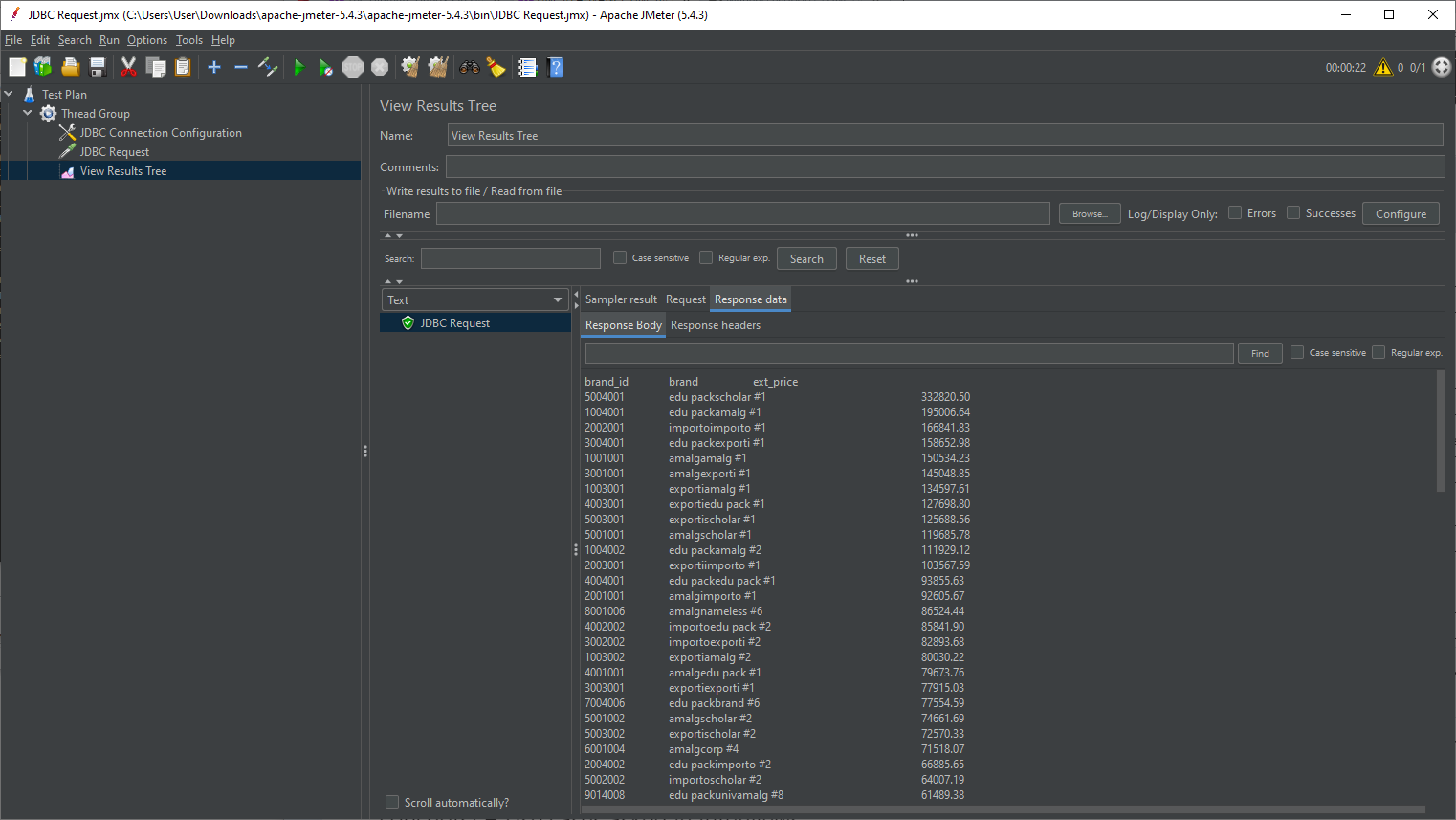

Query

hive -i testbench.settings

hive> use tpcds_bin_partitioned_orc_2;

hive> source query55.sql;

more

22/03/21 05:51:51 [main]: WARN conf.HiveConf: HiveConf of name hive.stats.fetch.partition.stats does not exist

22/03/21 05:51:51 [main]: WARN conf.HiveConf: HiveConf of name hive.heapsize does not exist

INFO : Compiling command(queryId=hive_20220321055152_3d36d3f3-e798-41a7-a86f-8b53c6368e54): select i_brand_id brand_id, i_brand brand,

sum(ss_ext_sales_price) ext_price

from date_dim, store_sales, item

where d_date_sk = ss_sold_date_sk

and ss_item_sk = i_item_sk

and i_manager_id=13

and d_moy=11

and d_year=1999

group by i_brand, i_brand_id

order by ext_price desc, i_brand_id

limit 100

INFO : No Stats for tpcds_bin_partitioned_orc_2@date_dim, Columns: d_moy, d_date_sk, d_year

INFO : No Stats for tpcds_bin_partitioned_orc_2@store_sales, Columns: ss_ext_sales_price, ss_item_sk

INFO : No Stats for tpcds_bin_partitioned_orc_2@item, Columns: i_manager_id, i_brand, i_brand_id, i_item_sk

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:brand_id, type:int, comment:null), FieldSchema(name:brand, type:char(50), comment:null), FieldSchema(name:ext_price, type:decimal(17,2), comment:null)], properties:null)

INFO : Completed compiling command(queryId=hive_20220321055152_3d36d3f3-e798-41a7-a86f-8b53c6368e54); Time taken: 1.89 seconds

INFO : Executing command(queryId=hive_20220321055152_3d36d3f3-e798-41a7-a86f-8b53c6368e54): select i_brand_id brand_id, i_brand brand,

sum(ss_ext_sales_price) ext_price

from date_dim, store_sales, item

where d_date_sk = ss_sold_date_sk

and ss_item_sk = i_item_sk

and i_manager_id=13

and d_moy=11

and d_year=1999

group by i_brand, i_brand_id

order by ext_price desc, i_brand_id

limit 100

INFO : Query ID = hive_20220321055152_3d36d3f3-e798-41a7-a86f-8b53c6368e54

INFO : Total jobs = 1

INFO : Launching Job 1 out of 1

INFO : Starting task [Stage-1:MAPRED] in serial mode

INFO : Subscribed to counters: [] for queryId: hive_20220321055152_3d36d3f3-e798-41a7-a86f-8b53c6368e54

INFO : Tez session hasn't been created yet. Opening session

INFO : Dag name: select i_brand_id brand_id, i_brand b...100 (Stage-1)

INFO : Setting tez.task.scale.memory.reserve-fraction to 0.30000001192092896

INFO : Status: Running (Executing on YARN cluster with App id application_1647836125196_0027)

----------------------------------------------------------------------------------------------

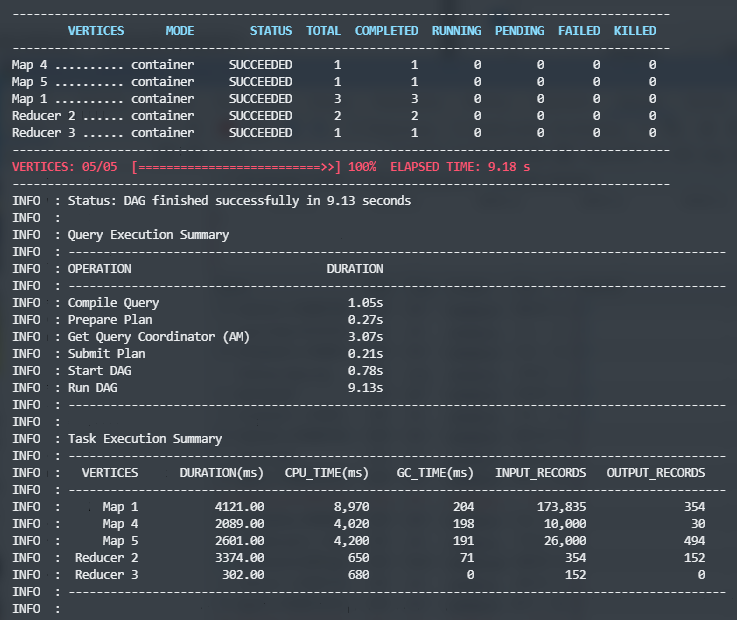

VERTICES MODE STATUS TOTAL COMPLETED RUNNING PENDING FAILED KILLED

----------------------------------------------------------------------------------------------

Map 4 .......... container SUCCEEDED 1 1 0 0 0 0

Map 5 .......... container SUCCEEDED 1 1 0 0 0 0

Map 1 .......... container SUCCEEDED 3 3 0 0 0 0

Reducer 2 ...... container SUCCEEDED 10 10 0 0 0 0

Reducer 3 ...... container SUCCEEDED 1 1 0 0 0 0

----------------------------------------------------------------------------------------------

VERTICES: 05/05 [==========================>>] 100% ELAPSED TIME: 9.26 s

----------------------------------------------------------------------------------------------

INFO : Status: DAG finished successfully in 9.21 seconds

INFO :

INFO : Query Execution Summary

| OPERATION | DURATION |
| --- | --- |
| Compile Query | 1.89s |
| Prepare Plan | 2.81s |
| Get Query Coordinator (AM) | 0.00s |
| Submit Plan | 0.30s |
| Start DAG | 0.96s |
| Run DAG | 9.21s |

INFO :

INFO : Task Execution Summary

| VERTICES | DURATION(ms) | CPU_TIME(ms) | GC_TIME(ms) | INPUT_RECORDS | OUTPUT_RECORDS |
| --- | --- | --- | --- | --- | --- |
| Map 1 | 2658.00 | 5,710 | 230 | 173,835 | 504 |
| Map 4 | 2665.00 | 3,440 | 225 | 26,000 | 494 |
| Map 5 | 2117.00 | 3,270 | 106 | 73,049 | 30 |
| Reducer 2 | 1552.00 | 2,270 | 300 | 353 | 566 |
| Reducer 3 | 727.00 | 470 | 13 | 152 | 0 |

INFO :

INFO : org.apache.tez.common.counters.DAGCounter:

INFO : NUM_SUCCEEDED_TASKS: 16

INFO : TOTAL_LAUNCHED_TASKS: 16

INFO : AM_CPU_MILLISECONDS: 4170

INFO : AM_GC_TIME_MILLIS: 107

INFO : File System Counters:

INFO : FILE_BYTES_READ: 743941

INFO : FILE_BYTES_WRITTEN: 30626

INFO : HDFS_BYTES_READ: 1485532

INFO : HDFS_BYTES_WRITTEN: 8110

INFO : HDFS_READ_OPS: 69

INFO : HDFS_WRITE_OPS: 2

INFO : HDFS_OP_CREATE: 1

INFO : HDFS_OP_GET_FILE_STATUS: 3

INFO : HDFS_OP_OPEN: 66

INFO : HDFS_OP_RENAME: 1

INFO : org.apache.tez.common.counters.TaskCounter:

INFO : REDUCE_INPUT_GROUPS: 304

INFO : REDUCE_INPUT_RECORDS: 505

INFO : COMBINE_INPUT_RECORDS: 0

INFO : SPILLED_RECORDS: 1010

INFO : NUM_SHUFFLED_INPUTS: 183

INFO : NUM_SKIPPED_INPUTS: 53

INFO : NUM_FAILED_SHUFFLE_INPUTS: 0

INFO : MERGED_MAP_OUTPUTS: 179

INFO : GC_TIME_MILLIS: 874

INFO : TASK_DURATION_MILLIS: 11696

INFO : CPU_MILLISECONDS: 15160

INFO : PHYSICAL_MEMORY_BYTES: 11792285696

INFO : VIRTUAL_MEMORY_BYTES: 42995609600

INFO : COMMITTED_HEAP_BYTES: 11792285696

INFO : INPUT_RECORDS_PROCESSED: 1328

INFO : INPUT_SPLIT_LENGTH_BYTES: 8391743

INFO : OUTPUT_RECORDS: 1029

INFO : OUTPUT_LARGE_RECORDS: 0

INFO : OUTPUT_BYTES: 32266

INFO : OUTPUT_BYTES_WITH_OVERHEAD: 35410

INFO : OUTPUT_BYTES_PHYSICAL: 24938

INFO : ADDITIONAL_SPILLS_BYTES_WRITTEN: 0

INFO : ADDITIONAL_SPILLS_BYTES_READ: 18270

INFO : ADDITIONAL_SPILL_COUNT: 0

INFO : SHUFFLE_CHUNK_COUNT: 13

INFO : SHUFFLE_BYTES: 31510

INFO : SHUFFLE_BYTES_DECOMPRESSED: 52246

INFO : SHUFFLE_BYTES_TO_MEM: 0

INFO : SHUFFLE_BYTES_TO_DISK: 0

INFO : SHUFFLE_BYTES_DISK_DIRECT: 31510

INFO : NUM_MEM_TO_DISK_MERGES: 0

INFO : NUM_DISK_TO_DISK_MERGES: 0

INFO : SHUFFLE_PHASE_TIME: 1167

INFO : MERGE_PHASE_TIME: 871

INFO : FIRST_EVENT_RECEIVED: 468

INFO : LAST_EVENT_RECEIVED: 695

INFO : HIVE:

INFO : CREATED_FILES: 1

INFO : DESERIALIZE_ERRORS: 0

INFO : RECORDS_IN_Map_1: 173311

INFO : RECORDS_IN_Map_4: 26000

INFO : RECORDS_IN_Map_5: 73049

INFO : RECORDS_OUT_0: 100

INFO : RECORDS_OUT_INTERMEDIATE_Map_1: 504

INFO : RECORDS_OUT_INTERMEDIATE_Map_4: 494

INFO : RECORDS_OUT_INTERMEDIATE_Map_5: 30

INFO : RECORDS_OUT_INTERMEDIATE_Reducer_2: 566

INFO : RECORDS_OUT_INTERMEDIATE_Reducer_3: 0

INFO : RECORDS_OUT_OPERATOR_EVENT_63: 30

INFO : RECORDS_OUT_OPERATOR_FIL_55: 494

INFO : RECORDS_OUT_OPERATOR_FIL_58: 30

INFO : RECORDS_OUT_OPERATOR_FIL_64: 173311

INFO : RECORDS_OUT_OPERATOR_FS_75: 100

INFO : RECORDS_OUT_OPERATOR_GBY_62: 30

INFO : RECORDS_OUT_OPERATOR_GBY_68: 353

INFO : RECORDS_OUT_OPERATOR_GBY_70: 152

INFO : RECORDS_OUT_OPERATOR_LIM_74: 100

INFO : RECORDS_OUT_OPERATOR_MAPJOIN_66: 3343

INFO : RECORDS_OUT_OPERATOR_MAPJOIN_67: 3343

INFO : RECORDS_OUT_OPERATOR_MAP_0: 0

INFO : RECORDS_OUT_OPERATOR_RS_57: 494

INFO : RECORDS_OUT_OPERATOR_RS_60: 30

INFO : RECORDS_OUT_OPERATOR_RS_69: 504

INFO : RECORDS_OUT_OPERATOR_RS_72: 566

INFO : RECORDS_OUT_OPERATOR_SEL_56: 494

INFO : RECORDS_OUT_OPERATOR_SEL_59: 30

INFO : RECORDS_OUT_OPERATOR_SEL_61: 30

INFO : RECORDS_OUT_OPERATOR_SEL_65: 173311

INFO : RECORDS_OUT_OPERATOR_SEL_71: 152

INFO : RECORDS_OUT_OPERATOR_SEL_73: 152

INFO : RECORDS_OUT_OPERATOR_TS_0: 173311

INFO : RECORDS_OUT_OPERATOR_TS_3: 26000

INFO : RECORDS_OUT_OPERATOR_TS_6: 73049

INFO : Shuffle Errors:

INFO : BAD_ID: 0

INFO : CONNECTION: 0

INFO : IO_ERROR: 0

INFO : WRONG_LENGTH: 0

INFO : WRONG_MAP: 0

INFO : WRONG_REDUCE: 0

INFO : Shuffle Errors_Reducer_2_INPUT_Map_1:

INFO : BAD_ID: 0

INFO : CONNECTION: 0

INFO : IO_ERROR: 0

INFO : WRONG_LENGTH: 0

INFO : WRONG_MAP: 0

INFO : WRONG_REDUCE: 0

INFO : Shuffle Errors_Reducer_3_INPUT_Reducer_2:

INFO : BAD_ID: 0

INFO : CONNECTION: 0

INFO : IO_ERROR: 0

INFO : WRONG_LENGTH: 0

INFO : WRONG_MAP: 0

INFO : WRONG_REDUCE: 0

INFO : TaskCounter_Map_1_INPUT_Map_4:

INFO : FIRST_EVENT_RECEIVED: 158

INFO : INPUT_RECORDS_PROCESSED: 988

INFO : LAST_EVENT_RECEIVED: 158

INFO : NUM_FAILED_SHUFFLE_INPUTS: 0

INFO : NUM_SHUFFLED_INPUTS: 2

INFO : SHUFFLE_BYTES: 12930

INFO : SHUFFLE_BYTES_DECOMPRESSED: 33000

INFO : SHUFFLE_BYTES_DISK_DIRECT: 12930

INFO : SHUFFLE_BYTES_TO_DISK: 0

INFO : SHUFFLE_BYTES_TO_MEM: 0

INFO : SHUFFLE_PHASE_TIME: 262

INFO : TaskCounter_Map_1_INPUT_Map_5:

INFO : FIRST_EVENT_RECEIVED: 152

INFO : INPUT_RECORDS_PROCESSED: 60

INFO : LAST_EVENT_RECEIVED: 152

INFO : NUM_FAILED_SHUFFLE_INPUTS: 0

INFO : NUM_SHUFFLED_INPUTS: 2

INFO : SHUFFLE_BYTES: 310

INFO : SHUFFLE_BYTES_DECOMPRESSED: 672

INFO : SHUFFLE_BYTES_DISK_DIRECT: 310

INFO : SHUFFLE_BYTES_TO_DISK: 0

INFO : SHUFFLE_BYTES_TO_MEM: 0

INFO : SHUFFLE_PHASE_TIME: 171

INFO : TaskCounter_Map_1_INPUT_store_sales:

INFO : INPUT_RECORDS_PROCESSED: 182

INFO : INPUT_SPLIT_LENGTH_BYTES: 6189101

INFO : TaskCounter_Map_1_OUTPUT_Reducer_2:

INFO : ADDITIONAL_SPILLS_BYTES_READ: 0

INFO : ADDITIONAL_SPILLS_BYTES_WRITTEN: 0

INFO : ADDITIONAL_SPILL_COUNT: 0

INFO : OUTPUT_BYTES: 10681

INFO : OUTPUT_BYTES_PHYSICAL: 13710

INFO : OUTPUT_BYTES_WITH_OVERHEAD: 12401

INFO : OUTPUT_LARGE_RECORDS: 0

INFO : OUTPUT_RECORDS: 353

INFO : SHUFFLE_CHUNK_COUNT: 3

INFO : SPILLED_RECORDS: 353

INFO : TaskCounter_Map_4_INPUT_item:

INFO : INPUT_RECORDS_PROCESSED: 26

INFO : INPUT_SPLIT_LENGTH_BYTES: 2019396

INFO : TaskCounter_Map_4_OUTPUT_Map_1:

INFO : ADDITIONAL_SPILLS_BYTES_READ: 0

INFO : ADDITIONAL_SPILLS_BYTES_WRITTEN: 0

INFO : ADDITIONAL_SPILL_COUNT: 0

INFO : OUTPUT_BYTES: 15506

INFO : OUTPUT_BYTES_PHYSICAL: 6489

INFO : OUTPUT_BYTES_WITH_OVERHEAD: 16500

INFO : OUTPUT_LARGE_RECORDS: 0

INFO : OUTPUT_RECORDS: 494

INFO : SPILLED_RECORDS: 0

INFO : TaskCounter_Map_5_INPUT_date_dim:

INFO : INPUT_RECORDS_PROCESSED: 72

INFO : INPUT_SPLIT_LENGTH_BYTES: 183246

INFO : TaskCounter_Map_5_OUTPUT_Map_1:

INFO : ADDITIONAL_SPILLS_BYTES_READ: 0

INFO : ADDITIONAL_SPILLS_BYTES_WRITTEN: 0

INFO : ADDITIONAL_SPILL_COUNT: 0

INFO : OUTPUT_BYTES: 270

INFO : OUTPUT_BYTES_PHYSICAL: 179

INFO : OUTPUT_BYTES_WITH_OVERHEAD: 336

INFO : OUTPUT_LARGE_RECORDS: 0

INFO : OUTPUT_RECORDS: 30

INFO : SPILLED_RECORDS: 0

INFO : TaskCounter_Reducer_2_INPUT_Map_1:

INFO : ADDITIONAL_SPILLS_BYTES_READ: 13710

INFO : ADDITIONAL_SPILLS_BYTES_WRITTEN: 0

INFO : COMBINE_INPUT_RECORDS: 0

INFO : FIRST_EVENT_RECEIVED: 155

INFO : LAST_EVENT_RECEIVED: 310

INFO : MERGED_MAP_OUTPUTS: 169

INFO : MERGE_PHASE_TIME: 788

INFO : NUM_DISK_TO_DISK_MERGES: 0

INFO : NUM_FAILED_SHUFFLE_INPUTS: 0

INFO : NUM_MEM_TO_DISK_MERGES: 0

INFO : NUM_SHUFFLED_INPUTS: 169

INFO : NUM_SKIPPED_INPUTS: 53

INFO : REDUCE_INPUT_GROUPS: 152

INFO : REDUCE_INPUT_RECORDS: 353

INFO : SHUFFLE_BYTES: 13710

INFO : SHUFFLE_BYTES_DECOMPRESSED: 12401

INFO : SHUFFLE_BYTES_DISK_DIRECT: 13710

INFO : SHUFFLE_BYTES_TO_DISK: 0

INFO : SHUFFLE_BYTES_TO_MEM: 0

INFO : SHUFFLE_PHASE_TIME: 654

INFO : SPILLED_RECORDS: 353

INFO : TaskCounter_Reducer_2_OUTPUT_Reducer_3:

INFO : ADDITIONAL_SPILLS_BYTES_READ: 0

INFO : ADDITIONAL_SPILLS_BYTES_WRITTEN: 0

INFO : ADDITIONAL_SPILL_COUNT: 0

INFO : OUTPUT_BYTES: 5809

INFO : OUTPUT_BYTES_PHYSICAL: 4560

INFO : OUTPUT_BYTES_WITH_OVERHEAD: 6173

INFO : OUTPUT_LARGE_RECORDS: 0

INFO : OUTPUT_RECORDS: 152

INFO : SHUFFLE_CHUNK_COUNT: 10

INFO : SPILLED_RECORDS: 152

INFO : TaskCounter_Reducer_3_INPUT_Reducer_2:

INFO : ADDITIONAL_SPILLS_BYTES_READ: 4560

INFO : ADDITIONAL_SPILLS_BYTES_WRITTEN: 0

INFO : COMBINE_INPUT_RECORDS: 0

INFO : FIRST_EVENT_RECEIVED: 3

INFO : LAST_EVENT_RECEIVED: 75

INFO : MERGED_MAP_OUTPUTS: 10

INFO : MERGE_PHASE_TIME: 83

INFO : NUM_DISK_TO_DISK_MERGES: 0

INFO : NUM_FAILED_SHUFFLE_INPUTS: 0

INFO : NUM_MEM_TO_DISK_MERGES: 0

INFO : NUM_SHUFFLED_INPUTS: 10

INFO : NUM_SKIPPED_INPUTS: 0

INFO : REDUCE_INPUT_GROUPS: 152

INFO : REDUCE_INPUT_RECORDS: 152

INFO : SHUFFLE_BYTES: 4560

INFO : SHUFFLE_BYTES_DECOMPRESSED: 6173

INFO : SHUFFLE_BYTES_DISK_DIRECT: 4560

INFO : SHUFFLE_BYTES_TO_DISK: 0

INFO : SHUFFLE_BYTES_TO_MEM: 0

INFO : SHUFFLE_PHASE_TIME: 80

INFO : SPILLED_RECORDS: 152

INFO : TaskCounter_Reducer_3_OUTPUT_out_Reducer_3:

INFO : OUTPUT_RECORDS: 0

INFO : org.apache.hadoop.hive.ql.exec.tez.HiveInputCounters:

INFO : GROUPED_INPUT_SPLITS_Map_1: 3

INFO : GROUPED_INPUT_SPLITS_Map_4: 1

INFO : GROUPED_INPUT_SPLITS_Map_5: 1

INFO : INPUT_DIRECTORIES_Map_1: 30

INFO : INPUT_DIRECTORIES_Map_4: 1

INFO : INPUT_DIRECTORIES_Map_5: 1

INFO : INPUT_FILES_Map_1: 30

INFO : INPUT_FILES_Map_4: 2

INFO : INPUT_FILES_Map_5: 1

INFO : RAW_INPUT_SPLITS_Map_1: 30

INFO : RAW_INPUT_SPLITS_Map_4: 2

INFO : RAW_INPUT_SPLITS_Map_5: 1

INFO : Completed executing command(queryId=hive_20220321055152_3d36d3f3-e798-41a7-a86f-8b53c6368e54); Time taken: 13.04 seconds

INFO : OK

| brand_id | brand | ext_price |
| --- | --- | --- |
| 5004001 | edu packscholar #1 | 332820.50 |
| 1004001 | edu packamalg #1 | 195006.64 |
| 2002001 | importoimporto #1 | 166841.83 |
| 3004001 | edu packexporti #1 | 158652.98 |
| 1001001 | amalgamalg #1 | 150534.23 |
| 3001001 | amalgexporti #1 | 145048.85 |
| 1003001 | exportiamalg #1 | 134597.61 |
| 4003001 | exportiedu pack #1 | 127698.80 |
| 5003001 | exportischolar #1 | 125688.56 |
| 5001001 | amalgscholar #1 | 119685.78 |
| 1004002 | edu packamalg #2 | 111929.12 |
| 2003001 | exportiimporto #1 | 103567.59 |
| 4004001 | edu packedu pack #1 | 93855.63 |
| 2001001 | amalgimporto #1 | 92605.67 |
| 8001006 | amalgnameless #6 | 86524.44 |
| 4002002 | importoedu pack #2 | 85841.90 |
| 3002002 | importoexporti #2 | 82893.68 |
| 1003002 | exportiamalg #2 | 80030.22 |
| 4001001 | amalgedu pack #1 | 79673.76 |
| 3003001 | exportiexporti #1 | 77915.03 |
| 7004006 | edu packbrand #6 | 77554.59 |
| 5001002 | amalgscholar #2 | 74661.69 |
| 5003002 | exportischolar #2 | 72570.33 |
| 6001004 | amalgcorp #4 | 71518.07 |
| 2004002 | edu packimporto #2 | 66885.65 |
| 5002002 | importoscholar #2 | 64007.19 |
| 9014008 | edu packunivamalg #8 | 61489.38 |
| 8005005 | scholarnameless #5 | 59745.94 |
| 7016010 | corpnameless #10 | 56127.47 |
| 10014016 | edu packamalgamalg #16 | 54375.51 |
| 10005012 | scholarunivamalg #12 | 50576.10 |
| 7003009 | exportibrand #9 | 50322.86 |
| 6003003 | exporticorp #3 | 50107.18 |
| 7002008 | importobrand #8 | 49852.90 |
| 7007006 | brandbrand #6 | 48832.99 |
| 7012003 | importonameless #3 | 48236.41 |
| 8004007 | edu packnameless #7 | 46026.86 |
| 9012003 | importounivamalg #3 | 45438.24 |
| 9008011 | namelessmaxi #11 | 44100.40 |
| 2002002 | importoimporto #2 | 43927.21 |
| 10011008 | amalgamalgamalg #8 | 42231.16 |
| 7002001 | importobrand #1 | 41503.75 |
| 6007006 | brandcorp #6 | 41433.37 |
| 7008003 | namelessbrand #3 | 40650.72 |
| 3002001 | importoexporti #1 | 39217.90 |
| 9012005 | importounivamalg #5 | 38468.24 |
| 8011005 | amalgmaxi #5 | 37950.52 |
| 7002006 | importobrand #6 | 37163.39 |
| 2004001 | edu packimporto #1 | 36442.77 |
| 10008015 | namelessunivamalg #15 | 36189.06 |
| 7002005 | importobrand #5 | 36062.52 |
| 5002001 | importoscholar #1 | 35236.68 |
| 9008003 | namelessmaxi #3 | 35078.26 |
| 9011003 | amalgunivamalg #3 | 34439.33 |
| 8003009 | exportinameless #9 | 34262.34 |
| 8003002 | exportinameless #2 | 33857.32 |
| 7011002 | amalgnameless #2 | 33700.86 |
| 7009007 | maxibrand #7 | 33182.74 |
| 7011003 | amalgnameless #3 | 32791.63 |
| 9015003 | scholarunivamalg #3 | 32492.61 |
| 3001002 | amalgexporti #2 | 32089.98 |
| 7013009 | exportinameless #9 | 32059.71 |
| 7003002 | exportibrand #2 | 31813.89 |
| 4002001 | importoedu pack #1 | 31522.59 |
| 9010003 | univunivamalg #3 | 29897.44 |
| 6005005 | scholarcorp #5 | 29672.11 |
| 7008001 | namelessbrand #1 | 29608.17 |
| 9012009 | importounivamalg #9 | 29411.59 |
| 4004002 | edu packedu pack #2 | 29213.90 |
| 8001001 | amalgnameless #1 | 29164.40 |
| 7014004 | edu packnameless #4 | 28687.65 |
| 7015009 | scholarnameless #9 | 28234.06 |
| 6015008 | scholarbrand #8 | 28176.44 |
| 7011001 | amalgnameless #1 | 28152.18 |
| 8010006 | univmaxi #6 | 28007.82 |
| 8012007 | importomaxi #7 | 27723.13 |
| 10003017 | exportiunivamalg #17 | 27683.31 |
| 9016009 | corpunivamalg #9 | 27595.68 |
| 9009002 | maximaxi #2 | 27435.40 |
| 6014005 | edu packbrand #5 | 27142.30 |
| 7004005 | edu packbrand #5 | 27074.34 |
| 7007005 | brandbrand #5 | 27063.95 |
| 9015011 | scholarunivamalg #11 | 26888.11 |
| 8011009 | amalgmaxi #9 | 26759.66 |
| 6010003 | univbrand #3 | 26226.21 |
| 8011001 | amalgmaxi #1 | 25546.08 |
| 9002009 | importomaxi #9 | 25237.32 |
| 10016015 | corpamalgamalg #15 | 24550.05 |
| 10012001 | importoamalgamalg #1 | 24528.00 |
| 10006010 | corpunivamalg #10 | 24494.90 |
| 8007003 | brandnameless #3 | 24489.42 |
| 7008009 | namelessbrand #9 | 24158.52 |
| 4001002 | amalgedu pack #2 | 24015.28 |
| 10015004 | scholaramalgamalg #4 | 23884.39 |
| 10012011 | importoamalgamalg #11 | 23830.45 |
| 7007010 | brandbrand #10 | 23679.03 |
| 9002008 | importomaxi #8 | 23558.95 |
| 9008009 | namelessmaxi #9 | 23403.68 |
| 1001002 | amalgamalg #2 | 23381.52 |
| 10010004 | univamalgamalg #4 | 23251.29 |

100 rows selected (15.279 seconds)

0: jdbc:hive2://sandbox-hdp.hortonworks.com:2> quit

. . . . . . . . . . . . . . . . . . . . . . .> ;

`Error: Error while compiling statement: FAILED: ParseException line 1:0 cannot recognize input near 'quit' '<EOF>' '<EOF>' (state=42000,code=40000)`

0: jdbc:hive2://sandbox-hdp.hortonworks.com:2> Closing: 0: jdbc:hive2://sandbox-hdp.hortonworks.com:2181/default;password=hive;serviceDiscoveryMode=zooKeeper;user=hive;zooKeeperNamespace=hiveserver2

Note: `hive` here opens a Beeline session, where client commands start with `!`. A bare `quit` is sent to HiveServer2 as SQL (hence the ParseException); use `!quit` to exit.
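To see where the ~15 s went, the Query Execution Summary phases can be added up. An awk sketch (`phase_breakdown` is a hypothetical name) fed with the six durations from the log above:

```bash
# Print each phase's duration and share of the total, then the total.
# Input: one line per phase, name and seconds separated by a tab.
phase_breakdown() {
  awk -F'\t' '
    { name[NR] = $1; secs[NR] = $2; total += $2 }
    END {
      d = total ? total : 1   # avoid dividing by zero on empty input
      for (i = 1; i <= NR; i++)
        printf "%-28s %6.2fs %5.1f%%\n", name[i], secs[i], 100 * secs[i] / d
      printf "%-28s %6.2fs\n", "total", total
    }'
}

# Durations copied from the query55 Query Execution Summary.
printf '%s\t%s\n' \
  "Compile Query" 1.89 "Prepare Plan" 2.81 \
  "Get Query Coordinator (AM)" 0.00 "Submit Plan" 0.30 \
  "Start DAG" 0.96 "Run DAG" 9.21 | phase_breakdown
```

The phases sum to 15.17 s against Beeline's 15.279 s. Run DAG is about 61% of that, so compile and plan preparation (4.70 s together) are a noticeable share at this small scale.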

Contents of `sample-queries-tpcds/query55.sql`:

-- start query 1 in stream 0 using template query55.tpl and seed 2031708268

select i_brand_id brand_id, i_brand brand,

sum(ss_ext_sales_price) ext_price

from date_dim, store_sales, item

where d_date_sk = ss_sold_date_sk

and ss_item_sk = i_item_sk

and i_manager_id=13

and d_moy=11

and d_year=1999

group by i_brand, i_brand_id

order by ext_price desc, i_brand_id

limit 100

-- end query 1 in stream 0 using template query55.tpl
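The compile log reported `No Stats` for every column this query touches, so the optimizer planned without column statistics. A sketch that generates `ANALYZE TABLE ... COMPUTE STATISTICS FOR COLUMNS` statements for query55's three tables. `analyze_sql` is hypothetical, whether statistics change this plan has not been measured here, and on large tables the analyze itself takes a while.

```bash
# Emit column-statistics statements for the given tables.
# Usage: analyze_sql DATABASE TABLE...
analyze_sql() {
  db=$1
  shift
  echo "use $db;"
  for t in "$@"; do
    echo "analyze table $t compute statistics for columns;"
  done
}

# Example (assumes the hive wrapper accepts -f):
# analyze_sql tpcds_bin_partitioned_orc_2 date_dim store_sales item > /tmp/analyze55.sql
# hive -f /tmp/analyze55.sql
```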

Cloudera CDP

Client environment (Apache Hive 3.1.2 and Hadoop 3.3.2 clients under `/root`, OpenJDK 11):

export HIVE_HOME="/root/apache-hive-3.1.2-bin"

export PATH="/root/apache-hive-3.1.2-bin/bin:$PATH"

export HADOOP_HOME="/root/hadoop-3.3.2"

export PATH="/root/hadoop-3.3.2/bin:$PATH"

export JAVA_HOME="/usr/lib/jvm/java-11-openjdk-11.0.14.1.1-2.el8_5.x86_64"
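A quick sanity check that the exported homes exist before running anything against CDP. `check_client_env` is a hypothetical helper; it only checks that the directories exist, not that the client versions match the cluster.

```bash
# Verify HIVE_HOME, HADOOP_HOME and JAVA_HOME point at existing directories.
check_client_env() {
  rc=0
  for pair in "HIVE_HOME=$HIVE_HOME" "HADOOP_HOME=$HADOOP_HOME" "JAVA_HOME=$JAVA_HOME"; do
    name=${pair%%=*}
    dir=${pair#*=}
    if [ -z "$dir" ] || [ ! -d "$dir" ]; then
      echo "$name is unset or not a directory: '$dir'" >&2
      rc=1
    fi
  done
  return $rc
}

# Example: check_client_env && hadoop version
```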

Edits to `tpcds-setup.sh` for the CDP cluster (Beeline via ZooKeeper discovery, data directory on HDFS):

HIVE="beeline -n hive -u 'jdbc:hive2://worker01:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2?tez.queue.name=default' "

DIR=hdfs://worker01/tmp/bench/$2
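The Beeline URLs here use ZooKeeper discovery: `jdbc:hive2://<zk quorum>/<db>;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=<ns>?<hive conf>`. A sketch that builds them; `hive_zk_url` is hypothetical, the quorum may be a comma-separated `host:port` list, and the defaults (`hiveserver2`, queue `default`) are taken from the lines above.

```bash
# Build a HiveServer2 JDBC URL that uses ZooKeeper service discovery.
# Usage: hive_zk_url ZK_QUORUM [DATABASE] [NAMESPACE] [TEZ_QUEUE]
hive_zk_url() {
  printf 'jdbc:hive2://%s/%s;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=%s?tez.queue.name=%s\n' \
    "$1" "${2:-}" "${3:-hiveserver2}" "${4:-default}"
}

# Example, equivalent to the beeline call that runs query55:
# beeline -n hive -u "$(hive_zk_url worker01:2181 tpcds_bin_partitioned_orc_2)" \
#   -f sample-queries-tpcds/query55.sql
```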

beeline -n hive -u 'jdbc:hive2://worker01:2181/tpcds_bin_partitioned_orc_2;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2?tez.queue.name=default' -f sample-queries-tpcds/query55.sql
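For benchmarking, one run is rarely enough. A sketch that repeats a command and prints whole-second wall-clock times; `time_runs` is hypothetical, and `date +%s` limits resolution to one second, which is fine for multi-second queries. The first run likely includes Tez session startup, as the "Tez session hasn't been created yet" line in the earlier log suggests.

```bash
# Run a command N times, discarding its output, and print seconds per run.
# Usage: time_runs N COMMAND [ARGS...]
time_runs() {
  n=$1
  shift
  i=1
  while [ "$i" -le "$n" ]; do
    start=$(date +%s)
    if "$@" >/dev/null 2>&1; then status=ok; else status=failed; fi
    end=$(date +%s)
    echo "run $i: $((end - start))s $status"
    i=$((i + 1))
  done
}

# Example (same URL as the beeline call above):
# time_runs 3 beeline -n hive \
#   -u 'jdbc:hive2://worker01:2181/tpcds_bin_partitioned_orc_2;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2?tez.queue.name=default' \
#   -f sample-queries-tpcds/query55.sql
```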

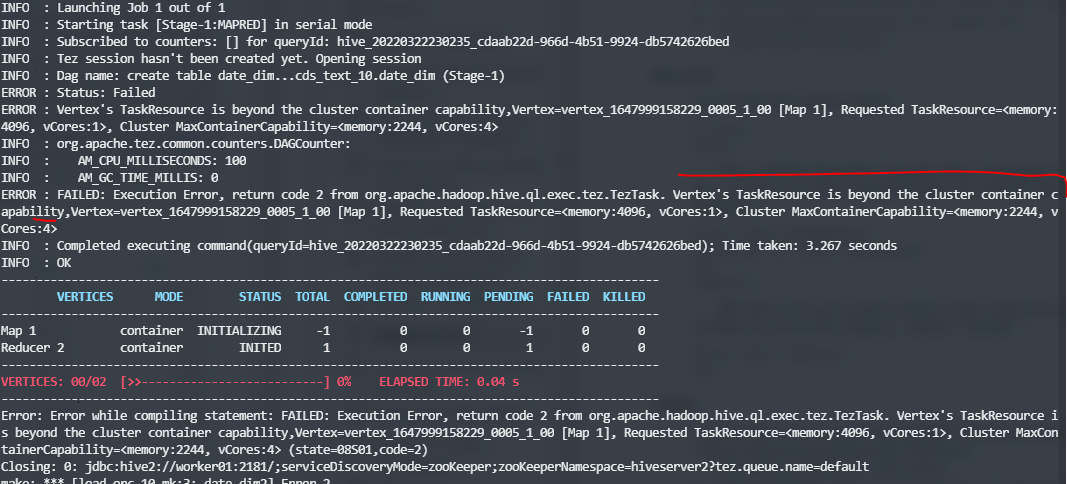

Caveats

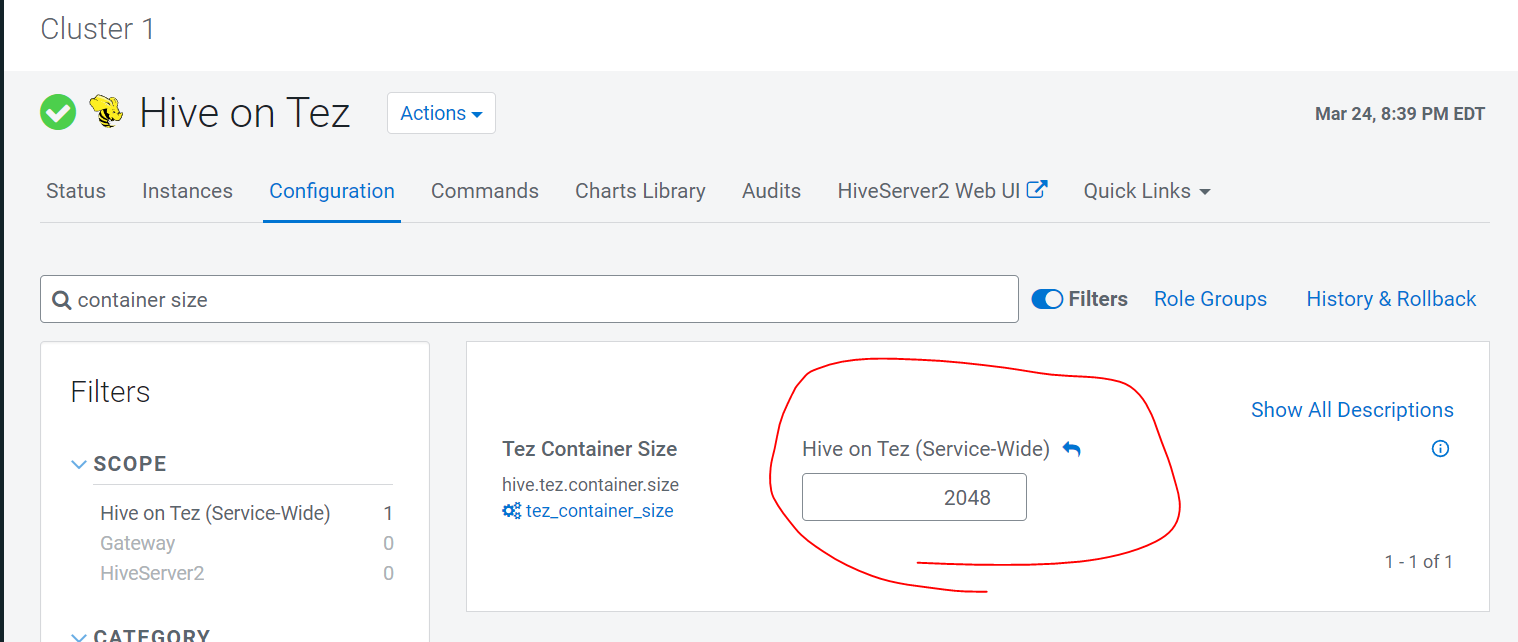

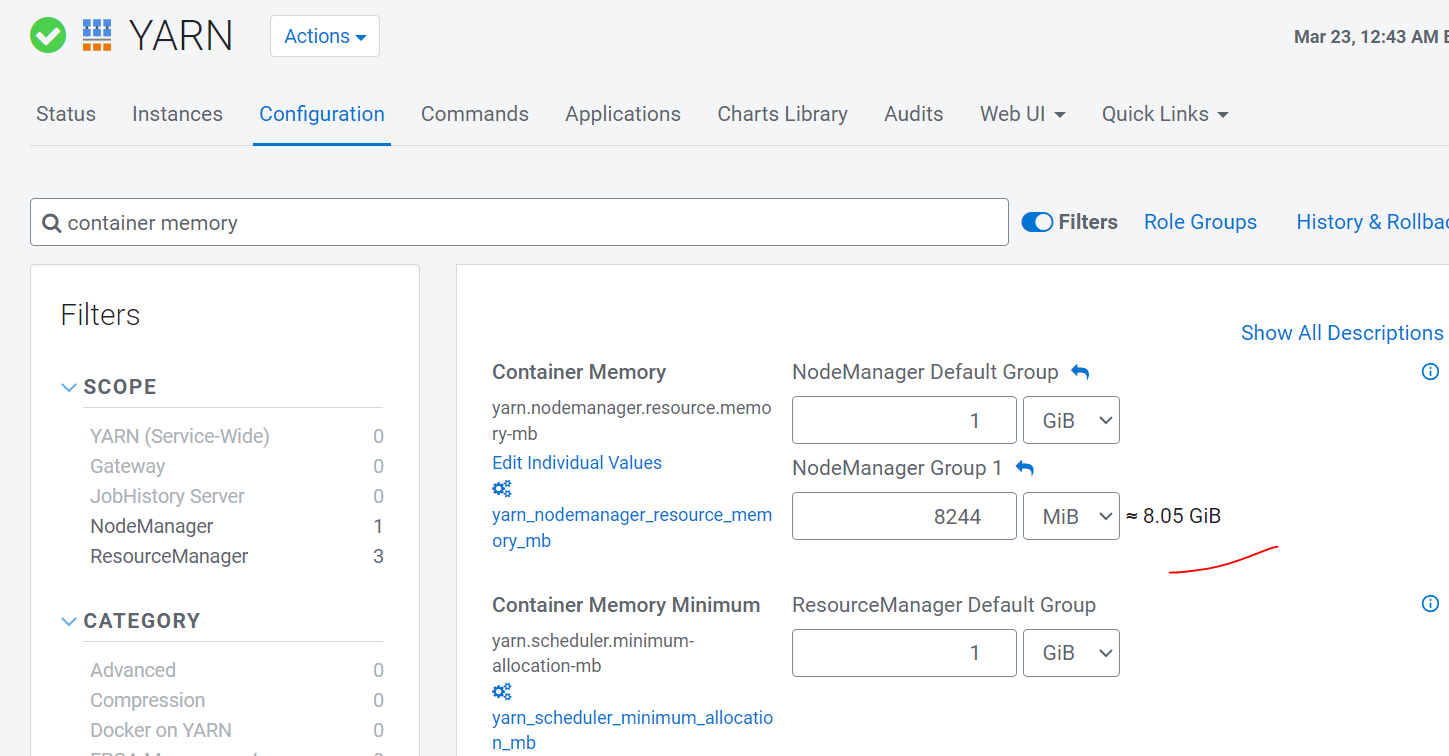

Error seen: "Vertex's TaskResource is beyond the cluster container capability". Related Cloudera Community thread:

https://community.cloudera.com/t5/Support-Questions/Hive-memory-error-beyond-the-cluster-container-capability/td-p/232969