https://github.com/PBWebMedia/yarn-prometheus-exporter

```shell
git clone https://github.com/PBWebMedia/yarn-prometheus-exporter.git
cd yarn-prometheus-exporter
go get
go build -o yarn-prometheus-exporter .
```

```shell
export YARN_PROMETHEUS_LISTEN_ADDR=:9113
export YARN_PROMETHEUS_ENDPOINT_SCHEME=http
export YARN_PROMETHEUS_ENDPOINT_HOST=localhost
export YARN_PROMETHEUS_ENDPOINT_PORT=8088
export YARN_PROMETHEUS_ENDPOINT_PATH=ws/v1/cluster/metrics
./yarn-prometheus-exporter
```
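The four `ENDPOINT_*` variables describe the YARN ResourceManager REST URL that the exporter polls. The helper below prints that URL so you can check it before starting the exporter. It is a sketch. It assumes the exporter joins the parts as `scheme://host:port/path` and falls back to the values exported above. The function name `yarn_endpoint_url` is hypothetical.

```shell
# Hypothetical helper: print the ResourceManager metrics URL implied by the
# exporter's environment variables. The fallbacks mirror the exports above.
# The scheme://host:port/path join is an assumption about the exporter.
yarn_endpoint_url() {
  printf '%s://%s:%s/%s\n' \
    "${YARN_PROMETHEUS_ENDPOINT_SCHEME:-http}" \
    "${YARN_PROMETHEUS_ENDPOINT_HOST:-localhost}" \
    "${YARN_PROMETHEUS_ENDPOINT_PORT:-8088}" \
    "${YARN_PROMETHEUS_ENDPOINT_PATH:-ws/v1/cluster/metrics}"
}
```

Example: `curl -s "$(yarn_endpoint_url)"` should return the ResourceManager's `clusterMetrics` JSON. If it does not, the exporter will not be able to reach YARN either.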

Using Docker:

```shell
docker run -p 9113:9113 pbweb/yarn-prometheus-exporter
```
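The `docker run` above sets no endpoint variables. The `export` lines earlier only affect the host shell, not the container. Inside the container, `localhost` means the container itself. So, assuming the exporter falls back to `localhost` as in the exports above, it would not reach a ResourceManager running elsewhere.

The sketch below passes the host explicitly. Its default `yarn.hadoop.lan` is a placeholder borrowed from the docker-compose example, and the function name is hypothetical. Setting `DOCKER=echo` prints the command instead of running it.

```shell
# Hypothetical wrapper: run the exporter container against a given
# ResourceManager host (placeholder default: yarn.hadoop.lan).
# Set DOCKER=echo for a dry run that only prints the command.
run_yarn_exporter() {
  "${DOCKER:-docker}" run -d -p 9113:9113 \
    -e "YARN_PROMETHEUS_ENDPOINT_HOST=${1:-yarn.hadoop.lan}" \
    pbweb/yarn-prometheus-exporter
}
```

Example: `run_yarn_exporter rm1.example.lan`, where `rm1.example.lan` stands in for your ResourceManager host.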

Using docker-compose:

```yaml
services:
  yarn-prometheus-exporter:   # service name (any name works)
    image: pbweb/yarn-prometheus-exporter
    restart: always
    environment:
      - "YARN_PROMETHEUS_ENDPOINT_HOST=yarn.hadoop.lan"
    ports:
      - "9113:9113"
```
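Once the exporter is running, its output can be checked directly. The Prometheus job below sets no `metrics_path`, so Prometheus will scrape the default `/metrics` on port 9113.

The filter below keeps only the YARN sample lines and drops `# HELP`/`# TYPE` comments and Go runtime metrics. It is a sketch. The `yarn_` metric-name prefix is an assumption about the exporter's naming, and `yarn_samples` is a hypothetical name.

```shell
# Hypothetical filter: read Prometheus text exposition on stdin and keep only
# sample lines whose metric name starts with "yarn_" (optionally with labels).
yarn_samples() {
  grep -E '^yarn_[A-Za-z0-9_]*(\{[^}]*\})? '
}
```

Example: `curl -s http://localhost:9113/metrics | yarn_samples`. Empty output suggests the exporter cannot reach the ResourceManager, or that the prefix assumption is wrong.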

Start Prometheus:

```shell
docker rm -f prom
docker run -dit --restart always \
  --name prom -p 9090:9090 \
  -v /root/prometheus/datastore:/prometheus \
  -v /root/prometheus/prometheus.yml:/etc/prometheus/prometheus.yml \
  prom/prometheus
```
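A malformed `prometheus.yml` stops Prometheus from starting. It can be validated first with `promtool`, which ships in the `prom/prometheus` image. The sketch below mounts the same file path used above. The function name is hypothetical, and `DOCKER=echo` gives a dry run.

```shell
# Hypothetical wrapper: validate a prometheus.yml with promtool from the
# prom/prometheus image. Defaults to the path mounted in the run command above.
# Set DOCKER=echo to print the command instead of running it.
check_prom_config() {
  "${DOCKER:-docker}" run --rm --entrypoint promtool \
    -v "${1:-/root/prometheus/prometheus.yml}:/etc/prometheus/prometheus.yml:ro" \
    prom/prometheus check config /etc/prometheus/prometheus.yml
}
```

Example: run `check_prom_config` before `docker rm -f prom`, so a broken config never replaces a running container. After startup, `curl -fsS http://localhost:9090/-/ready` reports whether Prometheus is ready.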

prometheus.yml

# my global config

global:

scrape_interval: 10s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

# scrape_timeout is set to the global default (10s).

# Alertmanager configuration

alerting:

alertmanagers:

- static_configs:

- targets:

# - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

rule_files:

# - "first_rules.yml"

# - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:

# Here it's Prometheus itself.

scrape_configs:

# The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.

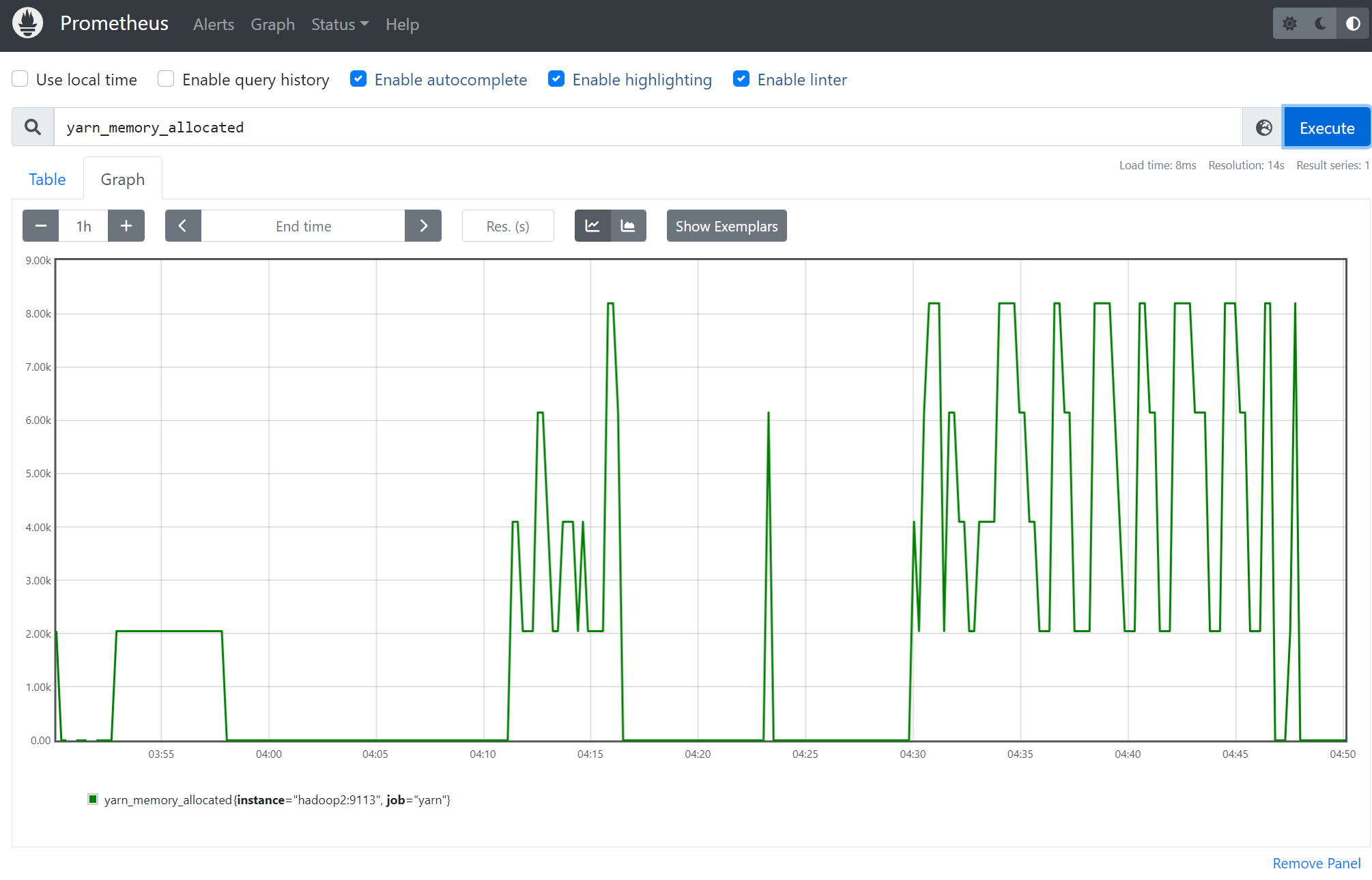

- job_name: yarn

scrape_interval: 5s

static_configs:

- targets:

- hadoop2:9113
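To confirm that Prometheus is actually scraping the exporter, query the `up` series for the `yarn` job through the HTTP API at `/api/v1/query`. The check below reads that JSON response on stdin. It succeeds if a `yarn` series has the value `"1"`.

Matching compact JSON with `grep` is a simplification chosen to avoid extra dependencies; `jq` would be more robust. The function name is hypothetical.

```shell
# Hypothetical check: read an /api/v1/query response for up{job="yarn"} on
# stdin and succeed if a series labelled job="yarn" has value "1" (target up).
# Relies on Prometheus returning compact JSON; jq would be sturdier.
yarn_target_is_up() {
  grep -q '"job":"yarn"[^]]*"value":\[[0-9.]*,"1"]'
}
```

Example:

`curl -sG http://localhost:9090/api/v1/query --data-urlencode 'query=up{job="yarn"}' | yarn_target_is_up && echo "yarn target is up"`

The same information appears on the Targets page at `http://localhost:9090/targets`.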