Erasure Set

https://docs.min.io/minio/baremetal/concepts/erasure-coding.html#minio-ec-erasure-set

Erasure coding protects data against multiple drive failures, unlike RAID or replication. For example, RAID6 protects against two drive failures, whereas with MinIO erasure coding (parity set to half the drives in the erasure set) you can lose up to half of the drives and the data remains safe. Further, MinIO’s erasure code works at the object level and can heal one object at a time. With RAID, healing can be done only at the volume level, which translates into high downtime. Because MinIO encodes each object individually, it can heal objects incrementally. Storage servers once deployed should not require drive replacement or healing for the lifetime of the server. MinIO’s erasure coded backend is designed for operational efficiency and takes full advantage of hardware acceleration whenever available.
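The trade-off between parity, usable capacity and failure tolerance can be sketched as simple arithmetic. This is a minimal illustration, not MinIO's implementation: the function name `erasure_summary` is hypothetical, and the write-quorum rule (one extra drive when parity equals half the set) is an assumption based on how the MinIO documentation describes quorum.

```python
def erasure_summary(drives: int, parity: int) -> dict:
    """Summarize one erasure set of `drives` drives with `parity` parity shards."""
    if drives < 2 or not 0 < parity <= drives // 2:
        raise ValueError("parity must be between 1 and drives // 2")
    data = drives - parity
    # Assumption: when parity is exactly half the set, a write needs
    # data + 1 drives so two disjoint halves cannot both accept a write.
    write_quorum = data + 1 if parity == data else data
    return {
        "data_shards": data,
        "parity_shards": parity,
        "usable_fraction": data / drives,          # share of raw capacity left for objects
        "read_tolerance": parity,                  # drives that may fail while reads still work
        "write_tolerance": drives - write_quorum,  # drives that may fail while writes still work
    }


if __name__ == "__main__":
    for parity in (2, 4):
        print(parity, erasure_summary(8, parity))
```

With 8 drives and parity 4, half of the raw capacity is usable and reads survive the loss of 4 drives, which matches the "half of the drives" claim above.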

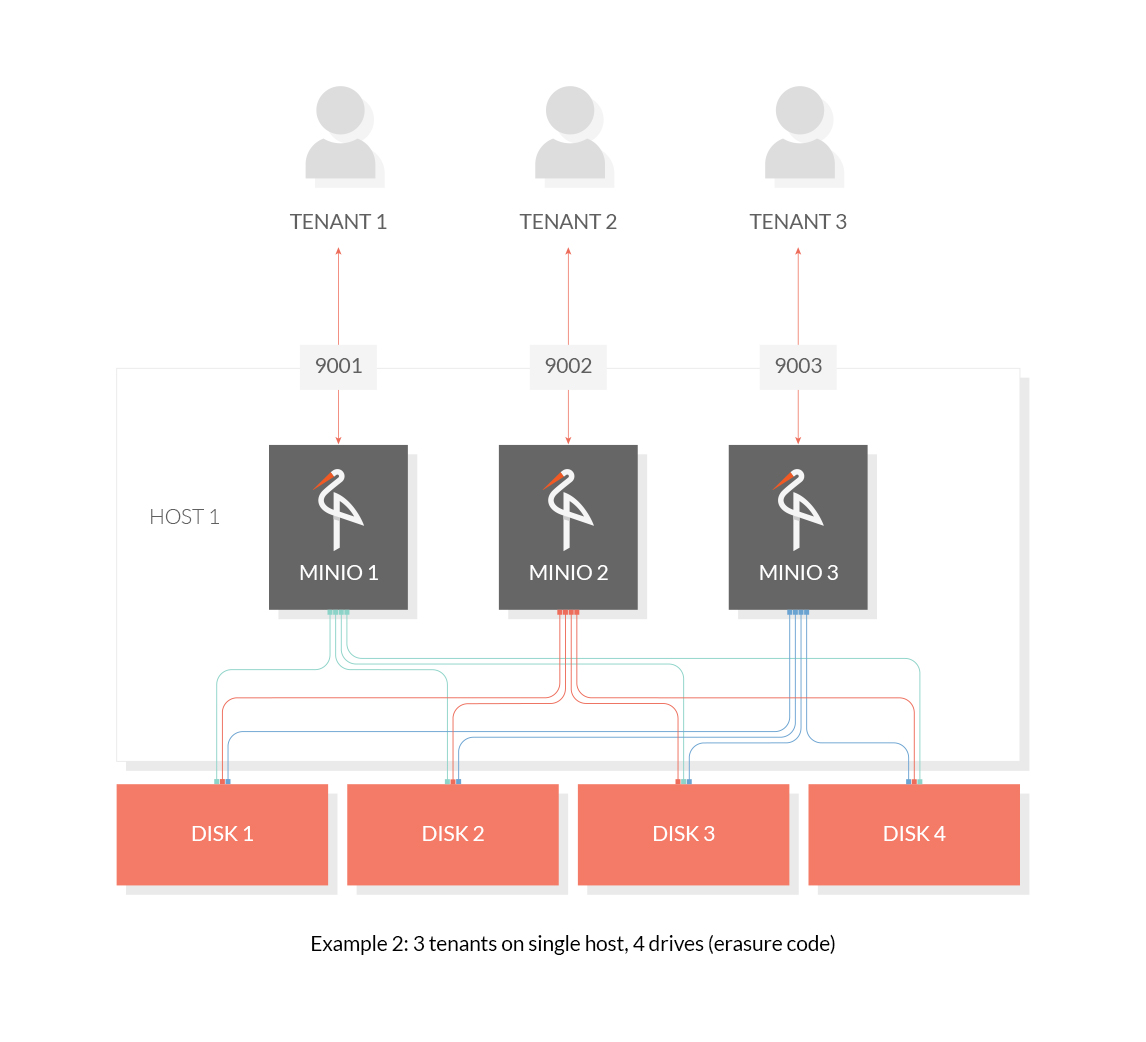

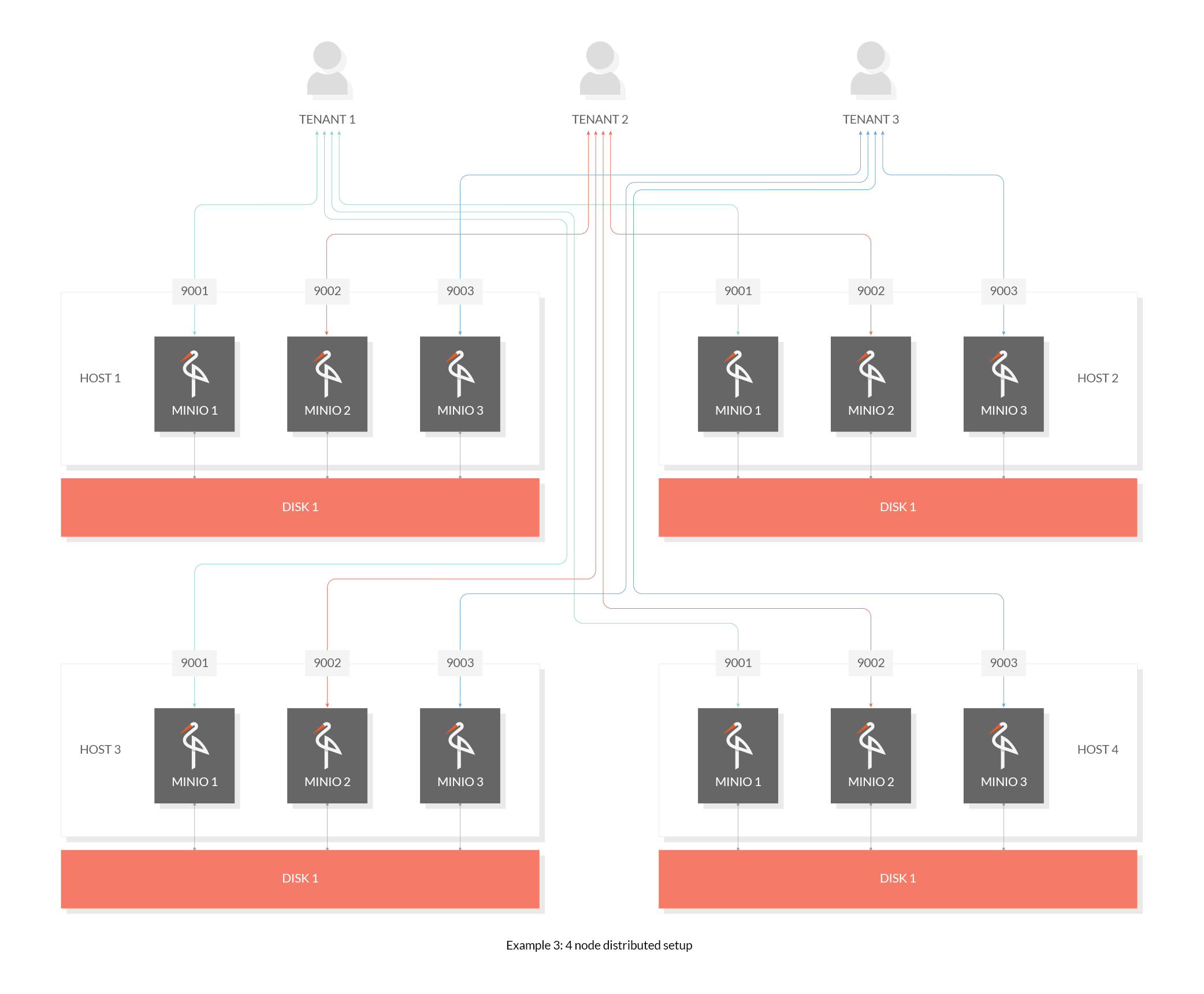

Multi-tenant MinIO Deployment Guide

https://docs.min.io/docs/multi-tenant-minio-deployment-guide

cookbook/backups

mysql

mc mb m1/mysqlbkp

Bucket created successfully `m1/mysqlbkp`.

mc mirror --force --remove --watch mysqlbkp/ m1/mysqlbkp

mongodb

mongodump -h mongo-server1 --port 27017 -d blog-data --archive | mc pipe minio1/mongobkp/backups/mongo-blog-data-`date +%Y-%m-%d`.archive

mongodump -h mongo-server1 --port 27017 --archive | ssh user@minio-server.example.com mc pipe minio1/mongobkp/full-db-`date +%Y-%m-%d`.archive

mongodump -h mongo-server1 --port 27017 --archive | pv -brat | ssh user@minio-server.example.com mc pipe minio1/mongobkp/full-db-`date +%Y-%m-%d`.archive

mc mirror --force --remove --watch mongobkp/ minio1/mongobkp
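The same dump-and-pipe backup can be driven from Python, for example from a scheduled job. This is a sketch under assumptions: the helper names `backup_target`, `dump_command` and `run_backup` are hypothetical, and `mongodump` and `mc` are assumed to be on the PATH with the `minio1` alias already configured. The port is passed with `--port`, since `-p` is mongodump's shorthand for the password.

```python
import datetime
import subprocess


def backup_target(alias_path: str, name: str, day: datetime.date) -> str:
    """Build a dated object path, like `date +%Y-%m-%d` in the shell version."""
    return f"{alias_path}/{name}-{day:%Y-%m-%d}.archive"


def dump_command(host: str, port: int, db: str = None) -> list:
    """Argument list for mongodump writing an archive to stdout."""
    cmd = ["mongodump", "--host", host, "--port", str(port), "--archive"]
    if db:
        cmd += ["--db", db]
    return cmd


def run_backup(host: str, port: int, target: str, db: str = None) -> int:
    """Stream mongodump into `mc pipe`; returns a non-zero code if either side fails."""
    dump = subprocess.Popen(dump_command(host, port, db), stdout=subprocess.PIPE)
    pipe = subprocess.Popen(["mc", "pipe", target], stdin=dump.stdout)
    dump.stdout.close()  # so mongodump gets SIGPIPE if mc exits early
    pipe_rc = pipe.wait()
    dump_rc = dump.wait()
    return dump_rc or pipe_rc


if __name__ == "__main__":
    target = backup_target("minio1/mongobkp/backups", "mongo-blog-data",
                           datetime.date.today())
    raise SystemExit(run_backup("mongo-server1", 27017, target, db="blog-data"))
```

Checking both exit codes matters here: a plain shell pipeline reports only the status of the last command, so a failed dump can go unnoticed.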

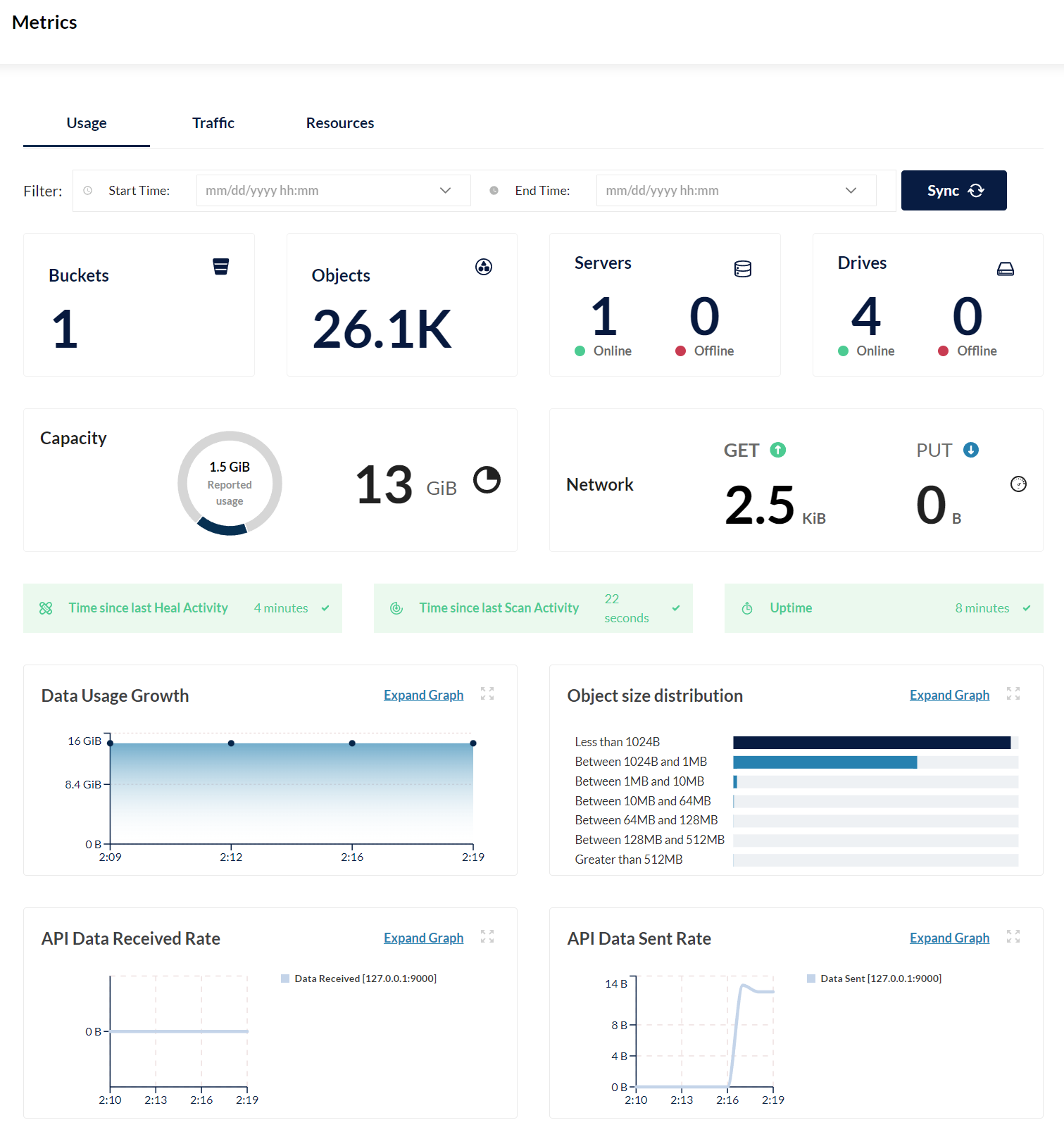

monitoring prometheus

https://docs.min.io/minio/baremetal/monitoring/metrics-alerts/collect-minio-metrics-using-prometheus.html#minio-metrics-collect-using-prometheus

set alias

mc alias set myminio http://miniotest:9000 minio minio123

gen token

mc admin prometheus generate myminio

scrape_configs:
- job_name: minio-job
  bearer_token: eyJhbGciOiJIUzUxMiIsInR5cCI6IkpXVCJ9.eyJleHAiOjQ4MDU1ODYyNzgsImlzcyI6InByb21ldGhldXMiLCJzdWIiOiJtaW5pbyJ9.TrJNtb86dodsUkIO_hSnjqRepX5jrWqiLUZ0DgjcnphjqqhjDzo4TycHRG6sSTjWOyxoKq2_d98uZokUGuFpEw
  metrics_path: /minio/v2/metrics/cluster
  scheme: http
  static_configs:
  - targets: ['miniotest:9000']
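The bearer token in the scrape config is a JWT, so its claims can be inspected before pasting it into Prometheus, for instance to check the expiry or which access key it was issued for. The sketch below only decodes the payload and does not verify the signature; `jwt_claims` is a hypothetical helper name, and the token is the sample one from these notes.

```python
import base64
import datetime
import json


def jwt_claims(token: str) -> dict:
    """Decode the (unverified) payload segment of a JWT."""
    _header, payload, _signature = token.split(".")
    # JWT segments are base64url without padding; restore it before decoding.
    padded = payload + "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


TOKEN = (
    "eyJhbGciOiJIUzUxMiIsInR5cCI6IkpXVCJ9."
    "eyJleHAiOjQ4MDU1ODYyNzgsImlzcyI6InByb21ldGhldXMiLCJzdWIiOiJtaW5pbyJ9."
    "TrJNtb86dodsUkIO_hSnjqRepX5jrWqiLUZ0DgjcnphjqqhjDzo4TycHRG6sSTjWOyxoKq2_d98uZokUGuFpEw"
)

if __name__ == "__main__":
    claims = jwt_claims(TOKEN)
    expires = datetime.datetime.fromtimestamp(claims["exp"], tz=datetime.timezone.utc)
    print("issuer:", claims["iss"])
    print("subject (access key):", claims["sub"])
    print("expires:", expires.isoformat())
```

For the sample token the subject is `minio`, the access key used in the `mc alias set` step.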

mc client admin

https://docs.min.io/docs/minio-admin-complete-guide.html

wget https://dl.min.io/client/mc/release/linux-amd64/mc

dummy single standalone test

docker run -dit \
  -p 9000:9000 \
  -p 9001:9001 \
  --name minio \
  -v /mnt/data:/data1 \
  -e MINIO_ROOT_USER=minio \
  -e MINIO_ROOT_PASSWORD=minio123 \
  quay.io/minio/minio server /data1 --console-address ":9001"
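Once the container is running, a quick liveness probe confirms the server is answering on the mapped API port. This is a minimal sketch that assumes the `/minio/health/live` endpoint is reachable without credentials on `http://localhost:9000`; the helper names `health_url` and `is_live` are hypothetical.

```python
import urllib.request


def health_url(base_url: str) -> str:
    """Liveness endpoint for a MinIO server at base_url."""
    return base_url.rstrip("/") + "/minio/health/live"


def is_live(base_url: str, timeout: float = 2.0) -> bool:
    """Return True if the liveness endpoint answers with HTTP 200."""
    try:
        with urllib.request.urlopen(health_url(base_url), timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Covers URLError/HTTPError, refused connections and timeouts.
        return False


if __name__ == "__main__":
    print("live" if is_live("http://localhost:9000") else "not reachable")
```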

minio gateway for s3

https://docs.min.io/docs/minio-gateway-for-s3.html

IAM profile based credentials. (performs an HTTP call to a pre-defined endpoint, only valid inside configured ec2 instances)

docker run -dit \
  -p 8000:9000 \
  --name minios3 \
  -e MINIO_ROOT_USER=minio \
  -e MINIO_ROOT_PASSWORD=minio123 \
  quay.io/minio/minio gateway s3

mc alias set s32 http://localhost:8000 minio minio123

quickstart

https://docs.min.io/docs/minio-erasure-code-quickstart-guide.html

podman run \
  -p 9000:9000 \
  -p 9001:9001 \
  --name minio \
  -v /mnt/data1:/data1 \
  -v /mnt/data2:/data2 \
  -v /mnt/data3:/data3 \
  -v /mnt/data4:/data4 \
  -v /mnt/data5:/data5 \
  -v /mnt/data6:/data6 \
  -v /mnt/data7:/data7 \
  -v /mnt/data8:/data8 \
  quay.io/minio/minio server /data{1...8} --console-address ":9001"
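The `{1...8}` argument uses three dots, which is MinIO's own range notation and not shell brace expansion (bash uses two dots), so the shell passes it through untouched and the server expands it. The sketch below imitates that expansion for a single numeric range to show which paths must exist inside the container; `expand_ellipsis` is a hypothetical helper, not MinIO code.

```python
import re
from typing import List

# Matches one numeric range such as {1...8}.
_ELLIPSIS = re.compile(r"\{(\d+)\.\.\.(\d+)\}")


def expand_ellipsis(pattern: str) -> List[str]:
    """Expand the first {start...end} range in pattern into concrete paths."""
    match = _ELLIPSIS.search(pattern)
    if match is None:
        return [pattern]
    start, end = int(match.group(1)), int(match.group(2))
    prefix, suffix = pattern[:match.start()], pattern[match.end():]
    return [f"{prefix}{i}{suffix}" for i in range(start, end + 1)]


if __name__ == "__main__":
    for path in expand_ellipsis("/data{1...8}"):
        print(path)
```

Each expanded path should line up with one `-v` mount in the run command above.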

github.com/minio/minio/blob/master/docs/gateway/nas.md

podman run \
  -p 9000:9000 \
  -p 9001:9001 \
  --name nas-s3 \
  -e "MINIO_ROOT_USER=minio" \
  -e "MINIO_ROOT_PASSWORD=minio123" \
  -v /shared/nasvol:/container/vol \
  quay.io/minio/minio gateway nas /container/vol --console-address ":9001"

my test

docker run \
  -p 9000:9000 \
  -p 9001:9001 \
  --name minio \
  -v /mnt/data1:/data1 \
  -v /mnt/data2:/data2 \
  -v /mnt/data3:/data3 \
  -v /mnt/data4:/data4 \
  -e MINIO_ROOT_USER=minio \
  -e MINIO_ROOT_PASSWORD=minio123 \
  -e MINIO_PROMETHEUS_AUTH_TYPE=public \
  -e MINIO_PROMETHEUS_URL="http://monit:9090/" \
  quay.io/minio/minio server /data{1...4} --console-address ":9001"

docker run \
  -p 9000:9000 \
  -p 9001:9001 \
  --name minio \
  -v /mnt/data1:/data1 \
  -v /mnt/data2:/data2 \
  -v /mnt/datatemp3:/data3 \
  -v /mnt/datatemp4:/data4 \
  -e MINIO_ROOT_USER=minio \
  -e MINIO_ROOT_PASSWORD=minio123 \
  -e MINIO_PROMETHEUS_AUTH_TYPE=public \
  quay.io/minio/minio server /data{1...4} --console-address ":9001"

my test2

docker run \
  -p 3000:9000 \
  -p 3001:9001 \
  --name minio \
  -v /mnt/data-3PJS:/data1 \
  -e MINIO_ROOT_USER=minio \
  -e MINIO_ROOT_PASSWORD=minio123 \
  -e MINIO_PROMETHEUS_AUTH_TYPE=public \
  quay.io/minio/minio server /data1 --console-address ":9001"

-e MINIO_PROMETHEUS_URL="http://monit:9090/" \

Scripts

Python custom upload

import os
import time
import traceback
from multiprocessing import Pool

from minio import Minio

# Endpoint and credentials of the target MinIO server.
client = Minio(
    "localhost:3000",
    access_key="minioadmin",
    secret_key="minioadmin",
    secure=False,
)

folder = "/mnt/dtoricoc3/completed"
bucket = "torrent"


def upload(bucket, objname, fpath):
    """Upload one file, retrying every 3 seconds until it succeeds."""
    while True:
        try:
            client.fput_object(bucket, objname, fpath)
            # os.remove(fpath)  # uncomment to delete the local copy after upload
            break
        except Exception:
            traceback.print_exc()
            time.sleep(3)


def listfiles(_folder):
    """Recursively collect the paths of all regular files under _folder."""
    ret = []
    for filename in os.listdir(_folder):
        filepath = f"{_folder}/{filename}"
        if os.path.isfile(filepath):
            ret.append(filepath)
        elif os.path.isdir(filepath):
            ret.extend(listfiles(filepath))
    return ret


def parallel(func, data, nthread=4):
    """Run func over data in a pool of nthread worker processes."""
    with Pool(nthread) as p:
        return p.map(func, data)


def worker(filename):
    # Object key: "j" followed by the path relative to the source folder.
    key = "j" + filename.replace(folder, "")
    print(filename, "to ->", key)
    upload(bucket, key, filename)


if __name__ == "__main__":
    allfiles = listfiles(folder)
    print("files", len(allfiles))
    parallel(worker, allfiles, 4)
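The worker above derives the object key by stripping the source folder with `str.replace`, which removes every occurrence of that string, not just the leading one. A sketch of a stricter alternative, assuming POSIX-style local paths; `object_key` is a hypothetical helper and the `"j"` prefix is taken from the script.

```python
import os
import posixpath


def object_key(root: str, path: str, prefix: str = "j") -> str:
    """Map a local file path under root to an object key below prefix."""
    rel = os.path.relpath(path, root)
    # Object keys always use forward slashes, whatever the local separator is.
    return posixpath.join(prefix, *rel.split(os.sep))


if __name__ == "__main__":
    root = "/mnt/dtoricoc3/completed"
    print(object_key(root, root + "/show/episode1.mkv"))
```

The result has the same shape as the original key (`j/<relative path>`), but a directory name that happens to repeat the root path is preserved instead of being stripped.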

mount s3-fuse

github.com/s3fs-fuse/s3fs-fuse

yum -y install s3fs-fuse

echo ACCESS_KEY_ID:SECRET_ACCESS_KEY > ${HOME}/.passwd-s3fs

chmod 600 ${HOME}/.passwd-s3fs

/etc/fstab

mybucket /path/to/mountpoint fuse.s3fs passwd_file=/etc/s3fs,_netdev,allow_other,use_path_request_style,url=https://url.to.s3/ 0 0

Appendix

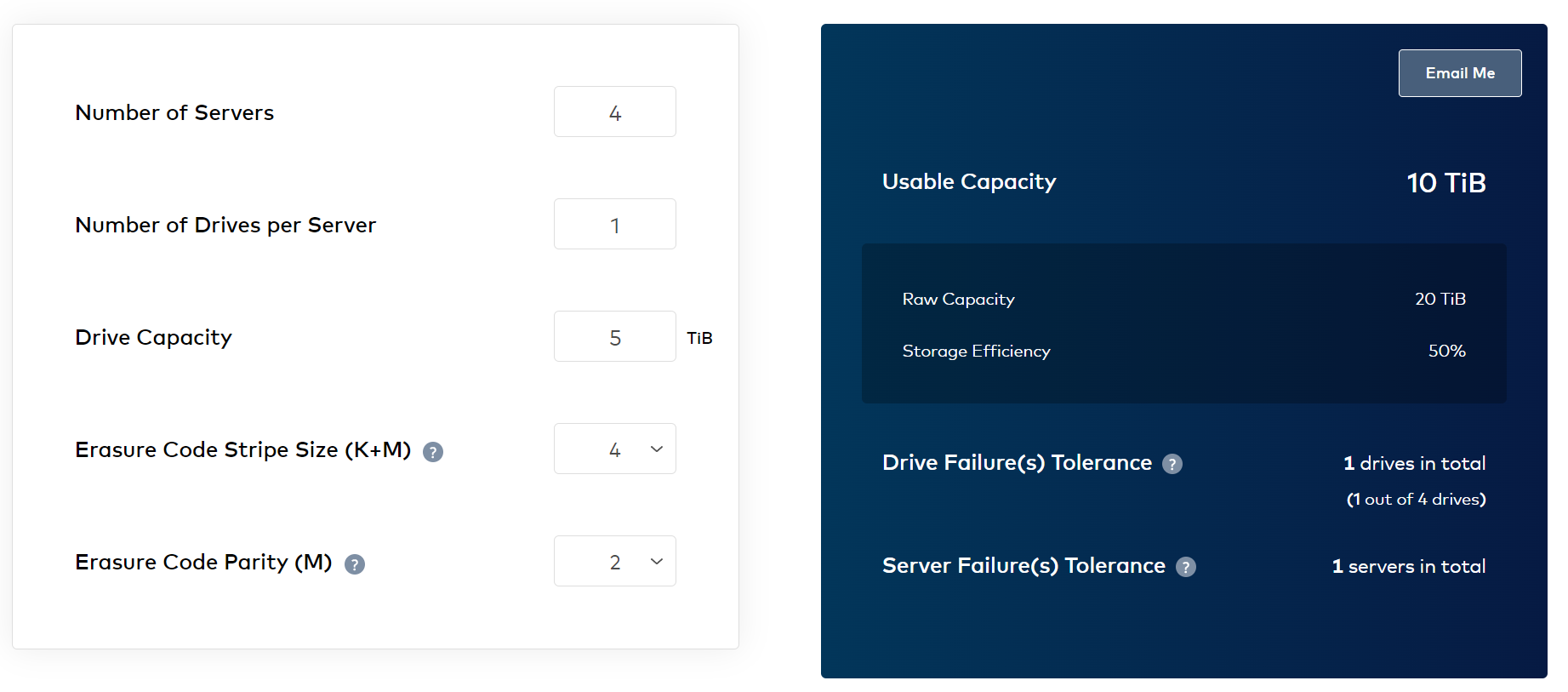

Erasure calculator

https://min.io/product/erasure-code-calculator