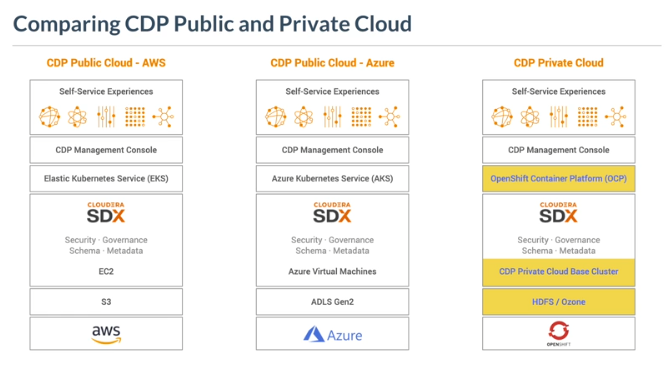

Cloudera CDP Private Cloud Base

https://docs.cloudera.com/cdp-private-cloud-base/7.1.6/yarn-managing-docker-containers/topics/yarn-install-docker.html

Download

https://www.cloudera.com/downloads/cdp-private-cloud-trial/cdp-private-cloud-base-trial.html

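Spoof the OS release string so the installer treats the host as CentOS 8.4, keeping a backup of the original file (both steps need root; a plain "sudo echo ... >" would fail because the redirection runs as the normal user):

sudo mv /etc/redhat-release /etc/redhat-release.bk

echo "CentOS Linux release 8.4 (Core)" | sudo tee /etc/redhat-release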

curl -O https://archive.cloudera.com/cm7/7.4.4/cloudera-manager-installer.bin

chmod u+x cloudera-manager-installer.bin

sudo ./cloudera-manager-installer.bin
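The steps above move /etc/redhat-release aside and overwrite it, but never put the original back. A minimal sketch, assuming the installer only needs the spoofed string while it runs (if later steps, for example agent or parcel checks, also read the file, keep the spoofed version in place). The function names and the RELEASE_FILE override are hypothetical, added so the steps can be tried on a scratch file first.

```shell
#!/bin/sh
# Hypothetical helpers around the /etc/redhat-release workaround above.
# RELEASE_FILE can point at a scratch file to try the steps safely.
RELEASE_FILE=${RELEASE_FILE:-/etc/redhat-release}
SPOOFED_RELEASE="CentOS Linux release 8.4 (Core)"

spoof_release() {
  # Back up the original only once; a second call does not
  # overwrite the backup with the spoofed text.
  [ -e "$RELEASE_FILE.bk" ] || mv "$RELEASE_FILE" "$RELEASE_FILE.bk" || return 1
  echo "$SPOOFED_RELEASE" > "$RELEASE_FILE"
}

restore_release() {
  # Put the original release file back after the installer has finished.
  [ -e "$RELEASE_FILE.bk" ] || return 1
  mv "$RELEASE_FILE.bk" "$RELEASE_FILE"
}
```

Usage, from a root shell with the functions defined: spoof_release && ./cloudera-manager-installer.bin; restore_release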

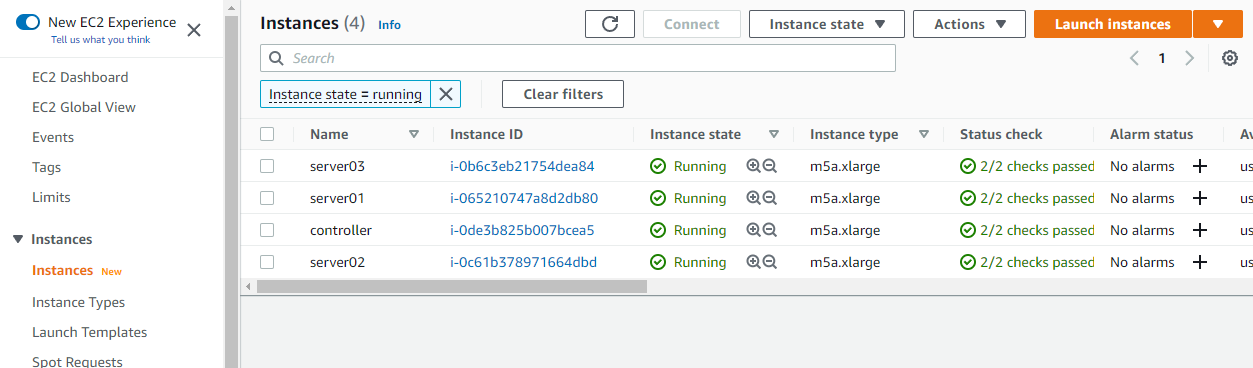

Provision

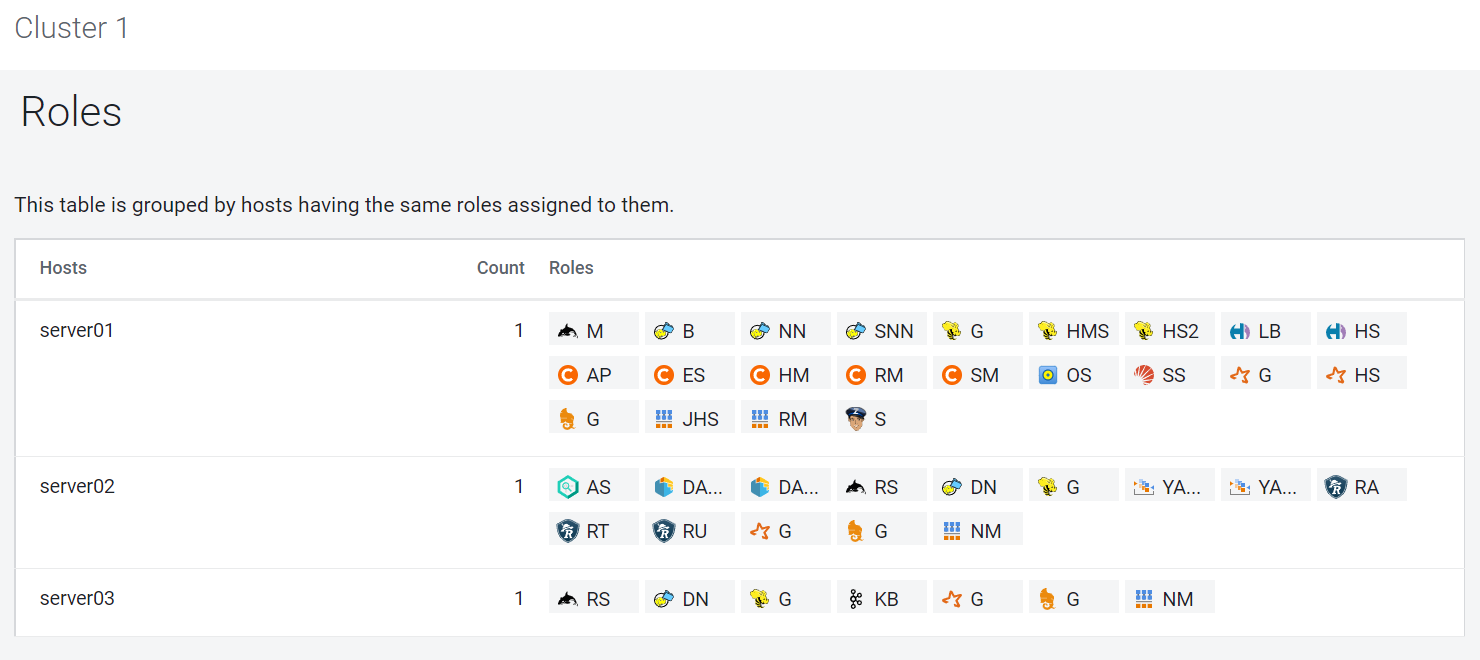

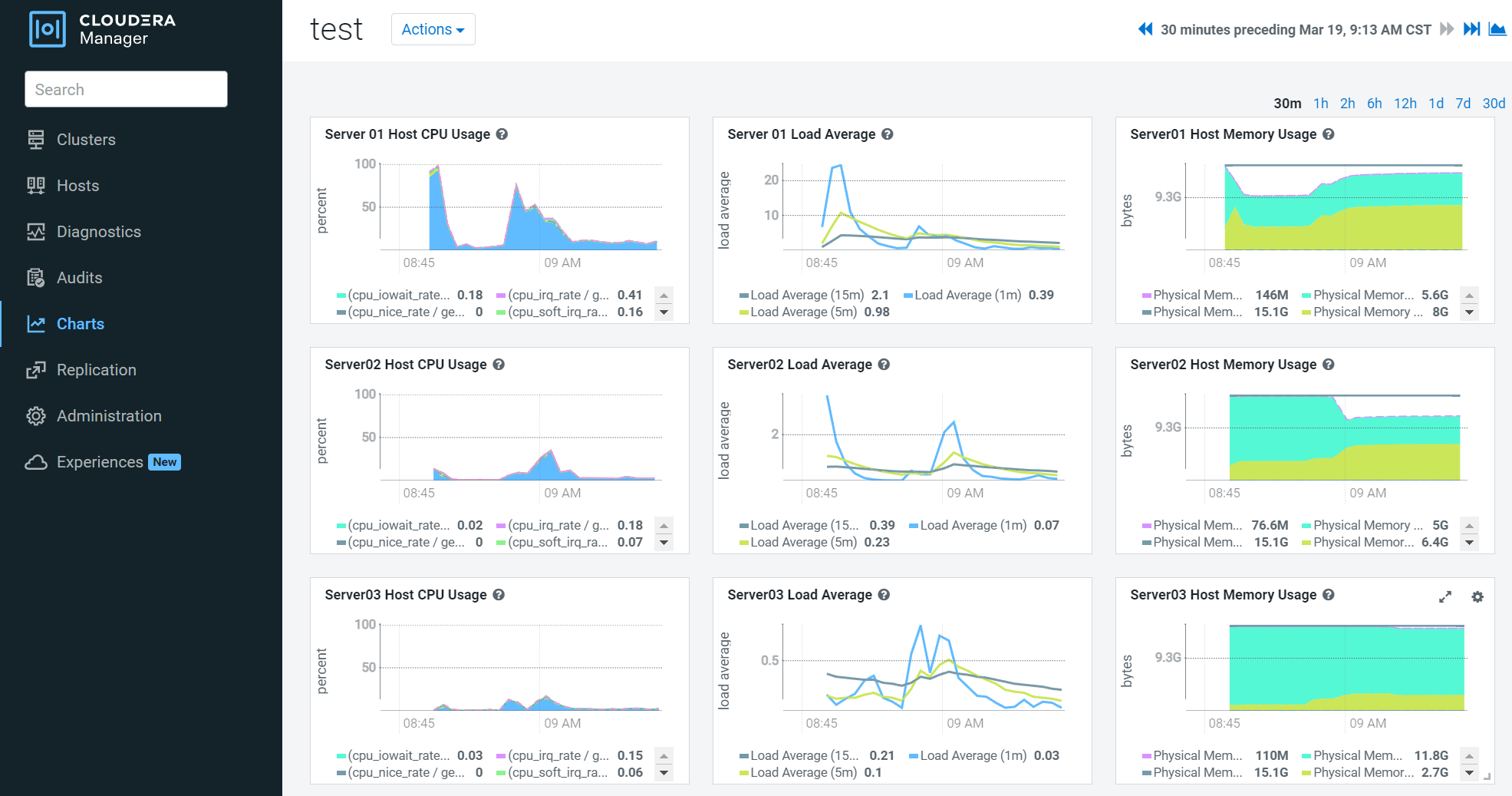

- Server01

- Server02

- Server03

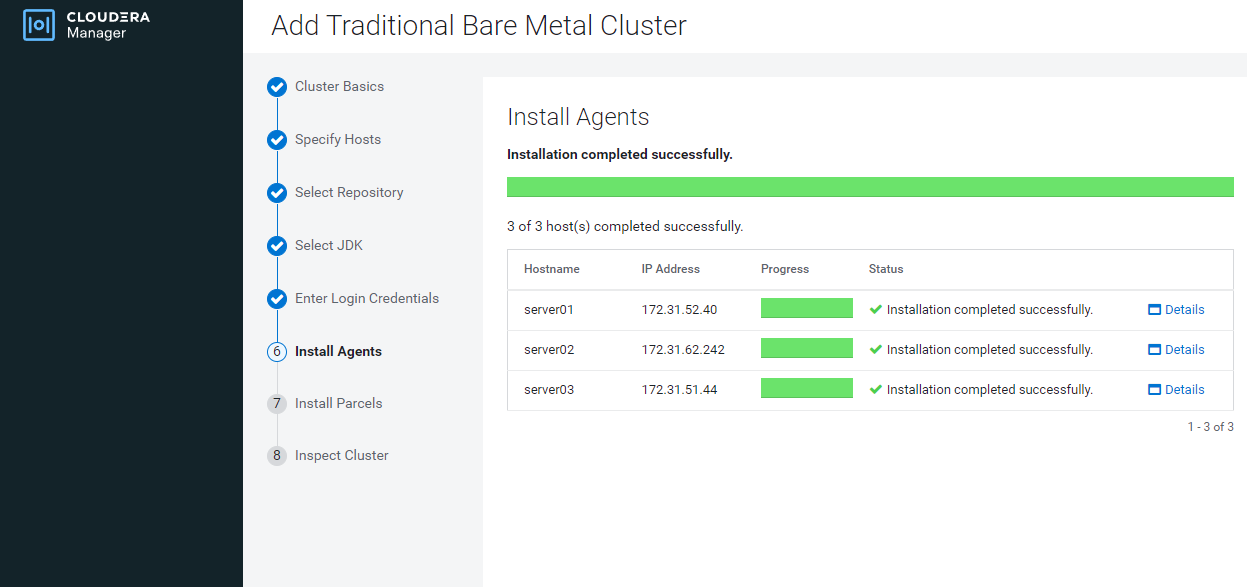

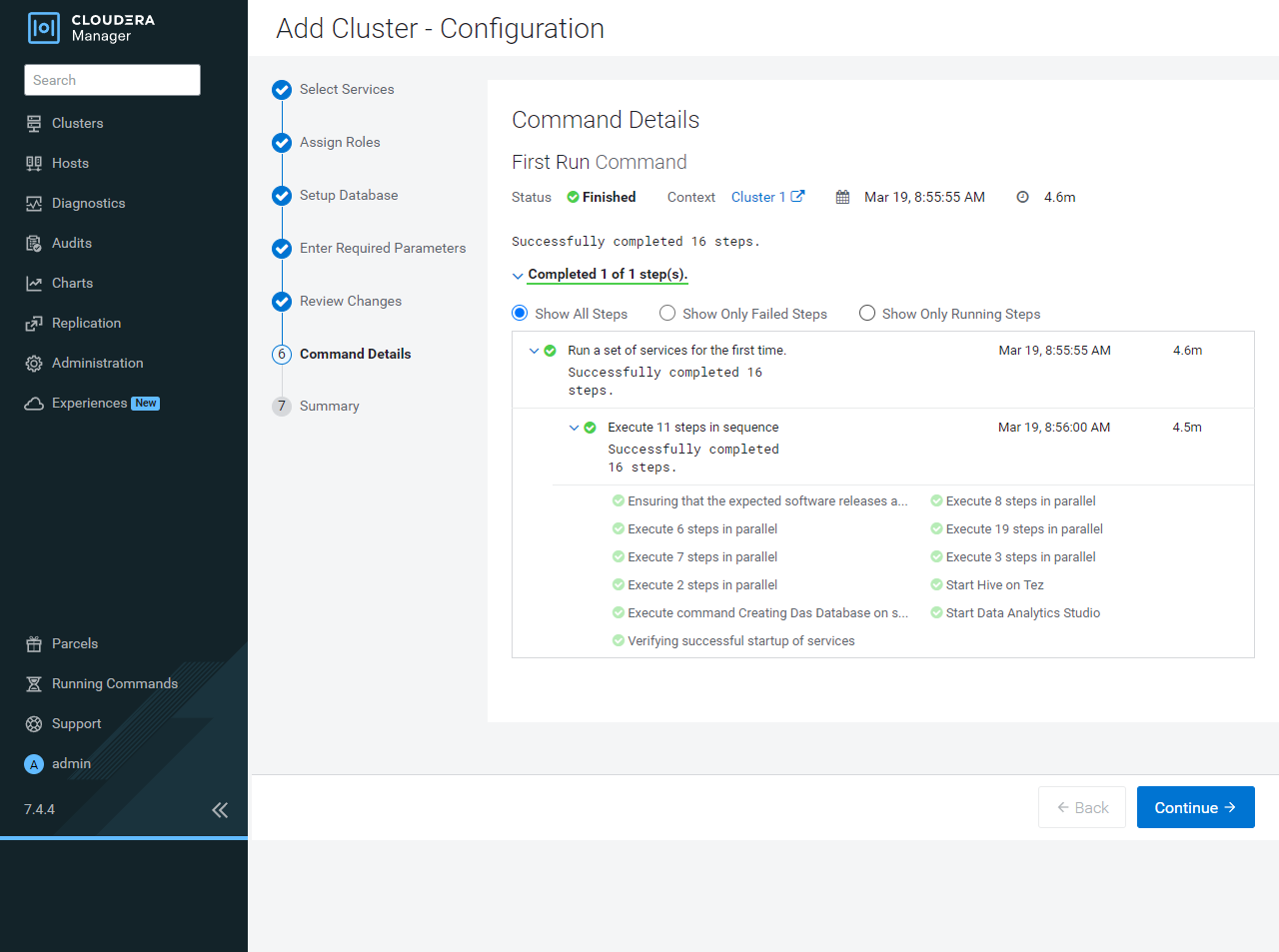

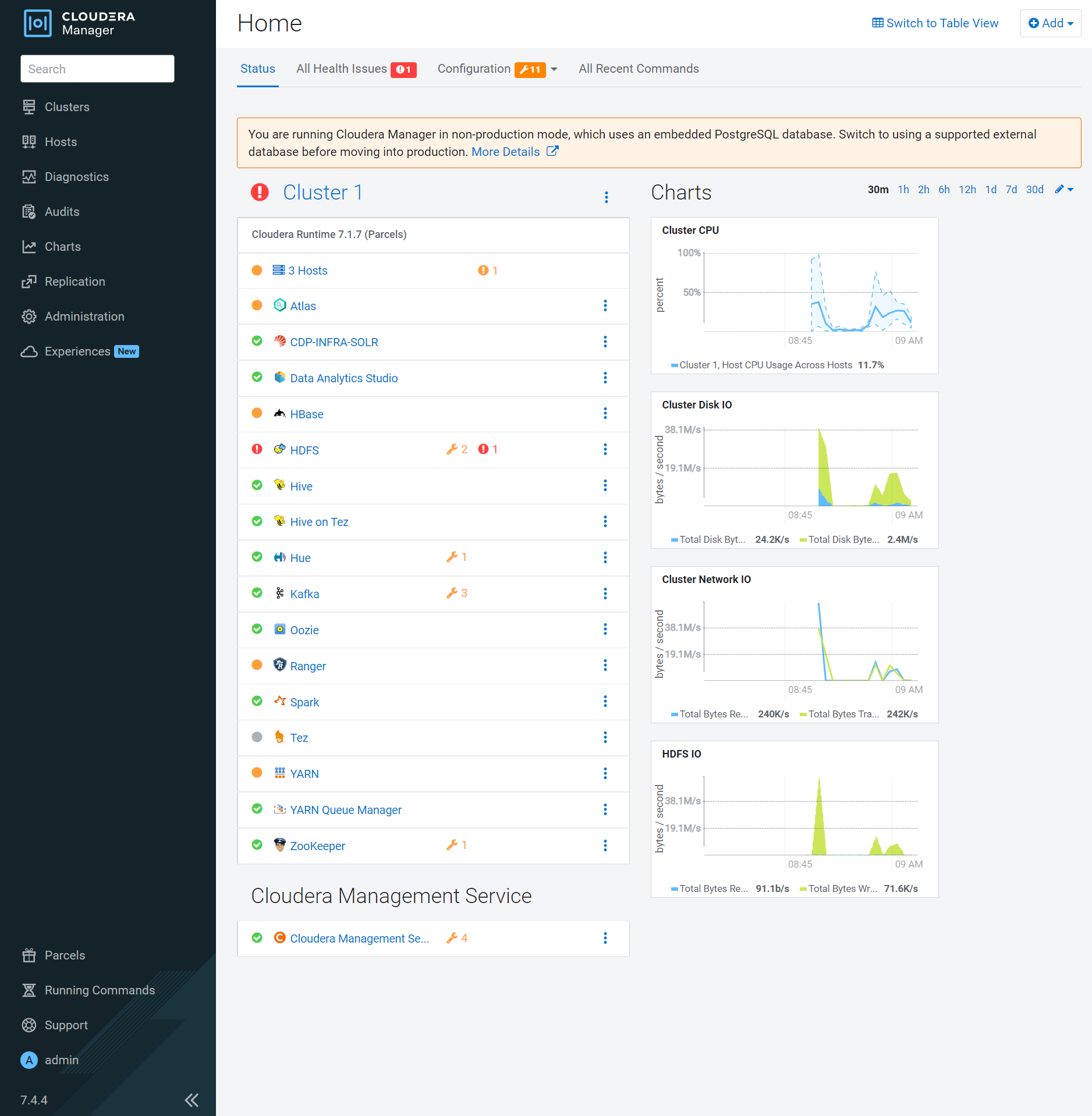

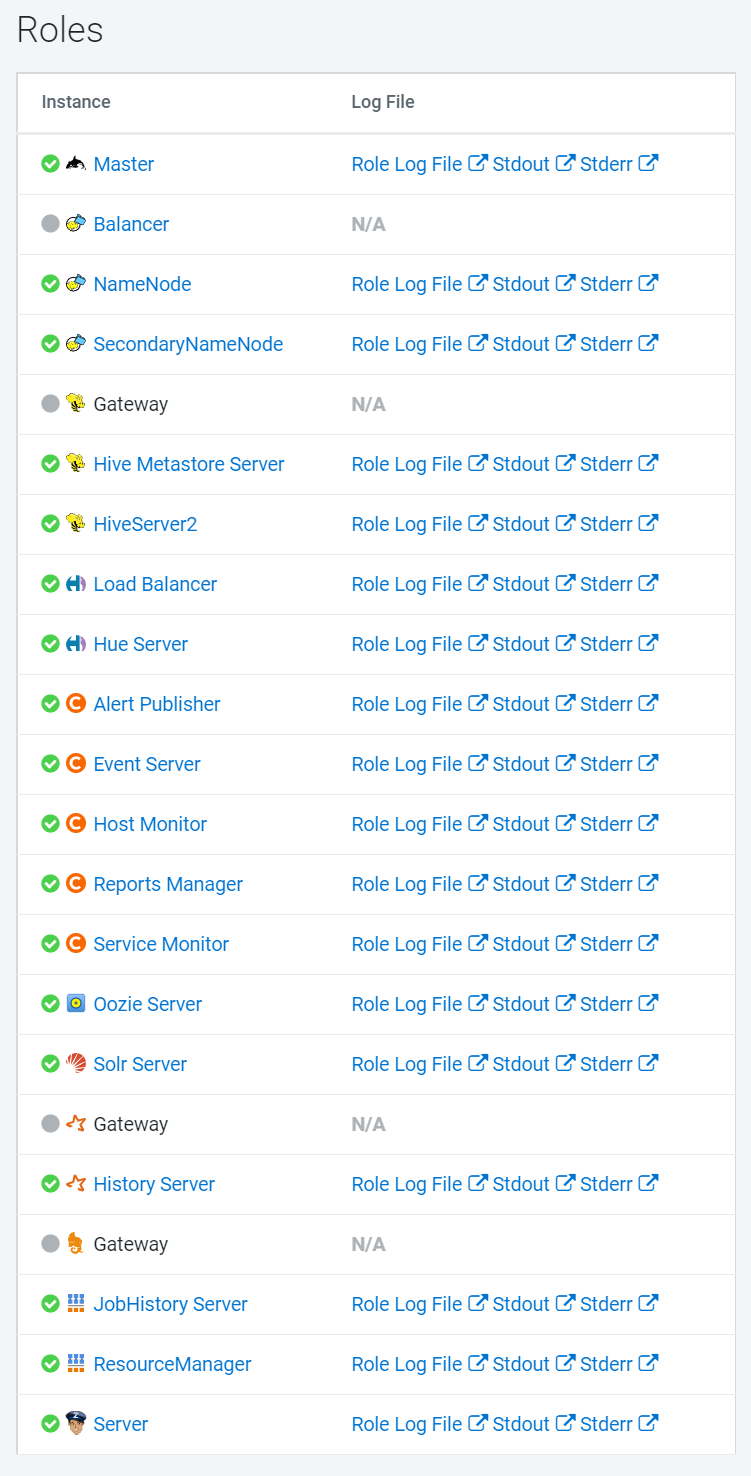

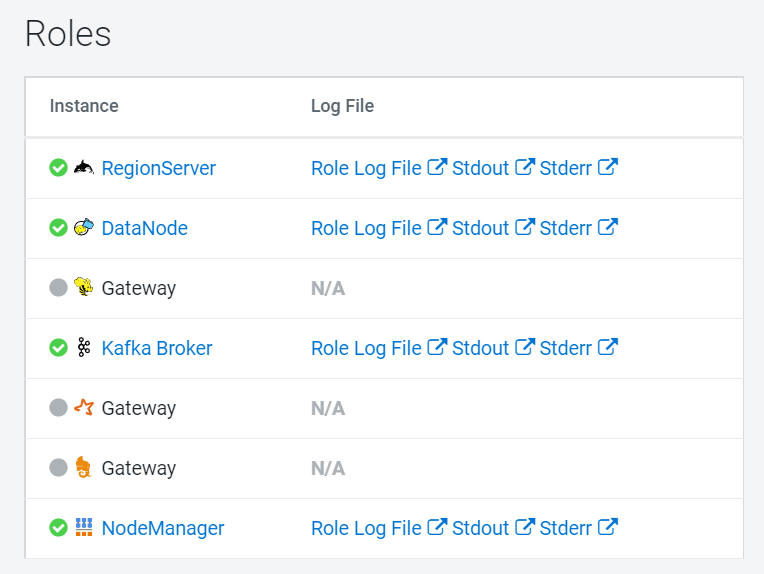

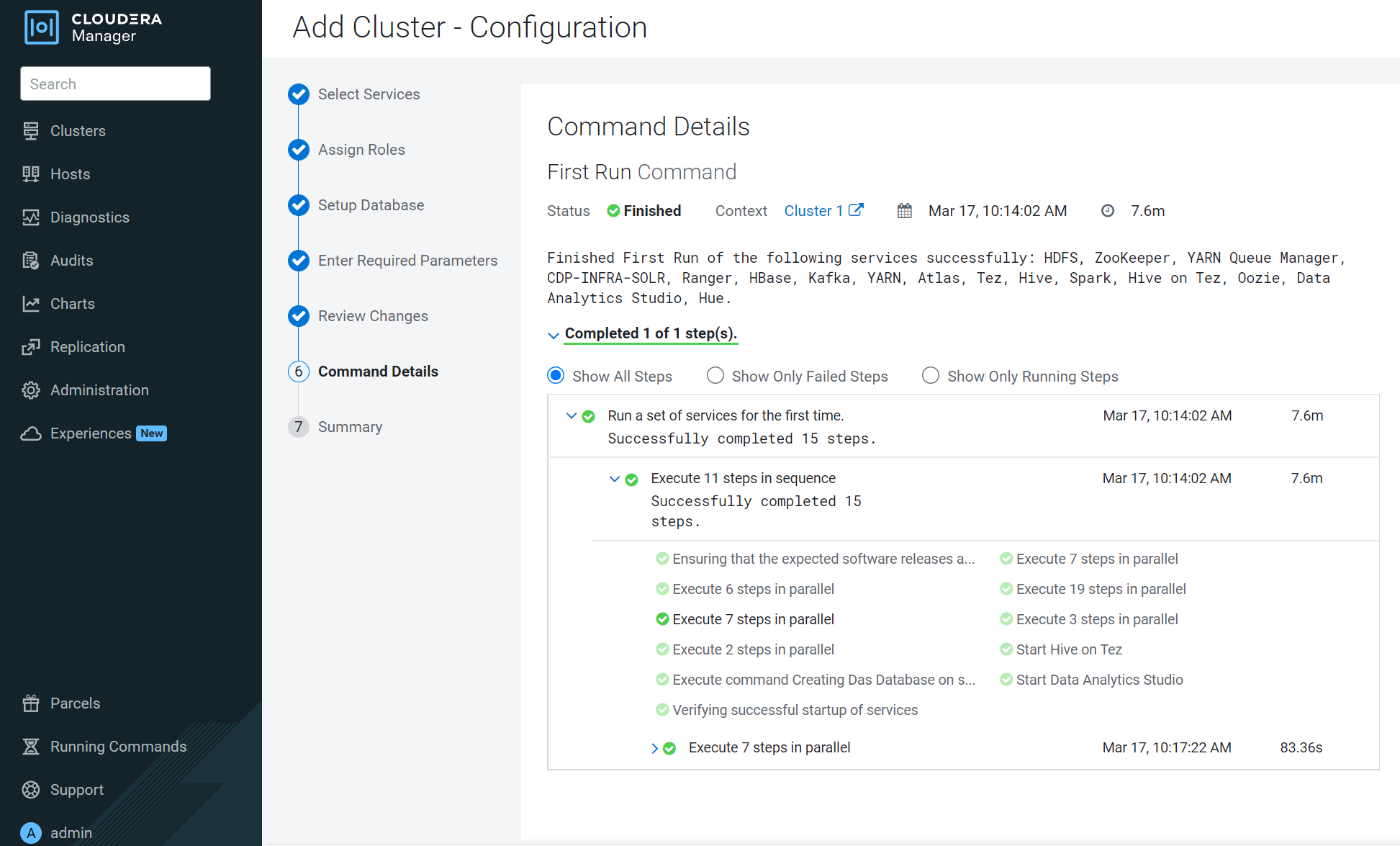

Cluster creation

Create a CDP Private Cloud Base (Trial) Development Cluster

Training

https://www.cloudera.com/about/training.html#?fq=training%3Acomplimentary%2Ffree

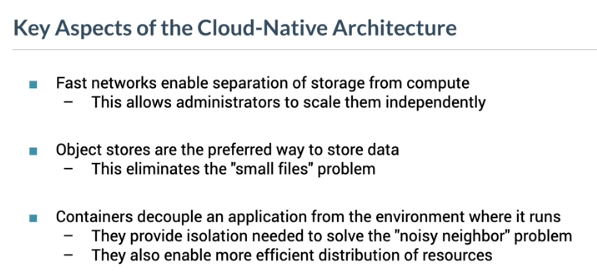

- Cloudera Essentials for CDP

- CDP Private Cloud Fundamentals

- Introduction to Cloudera Machine Learning

- Introduction to Cloudera Data Warehouse


CDP Private Cloud Fundamentals - Cloudera, IBM, Red Hat

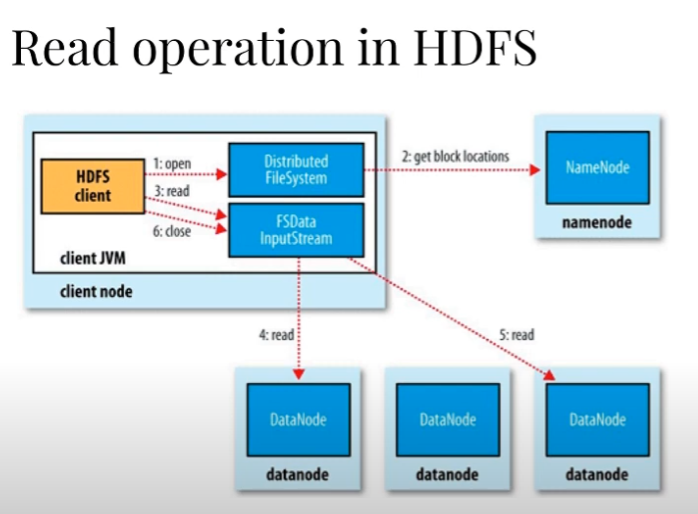

- Using HDFS:

  - UI (Ambari)

  - CLI

  - HTTP / HDFS Proxies

  - Java interface

  - NFS Gateway
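For the HTTP route, HDFS exposes the WebHDFS REST API under /webhdfs/v1 on the NameNode's web port. A small sketch that builds such URLs; the function name is hypothetical, and port 9870 in the usage line is an assumption (the Hadoop 3 default NameNode HTTP port; Hadoop 2 used 50070, and a given cluster may differ).

```shell
# Hypothetical helper: build a WebHDFS REST URL.
# $1 = NameNode host, $2 = NameNode HTTP port,
# $3 = absolute HDFS path, $4 = WebHDFS operation (e.g. LISTSTATUS)
webhdfs_url() {
  printf 'http://%s:%s/webhdfs/v1%s?op=%s\n' "$1" "$2" "$3" "$4"
}
```

Usage: curl -s "$(webhdfs_url localhost 9870 /techcoreeasy LISTSTATUS)" returns the directory listing as JSON. For OP=OPEN, add curl -L, since the NameNode answers with a redirect to a DataNode.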

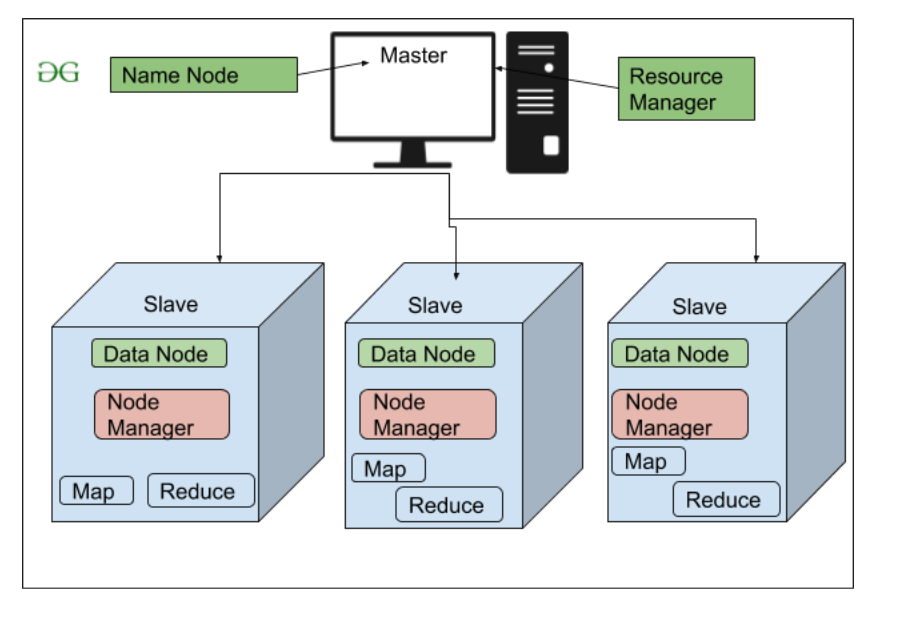

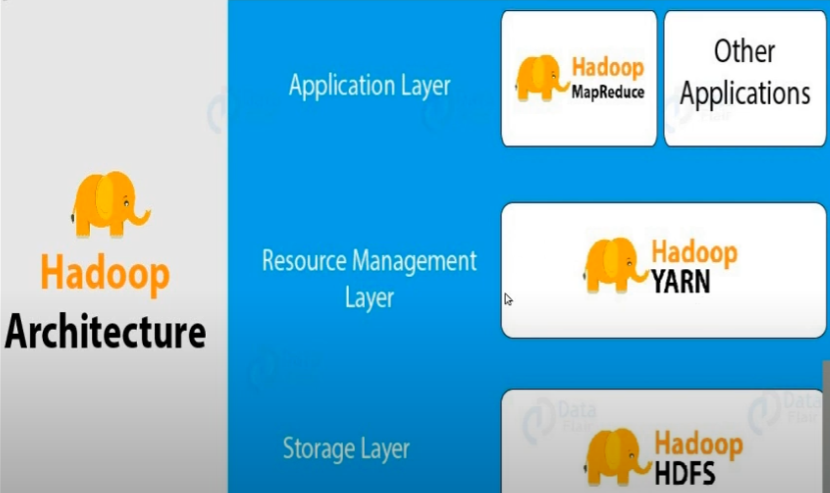

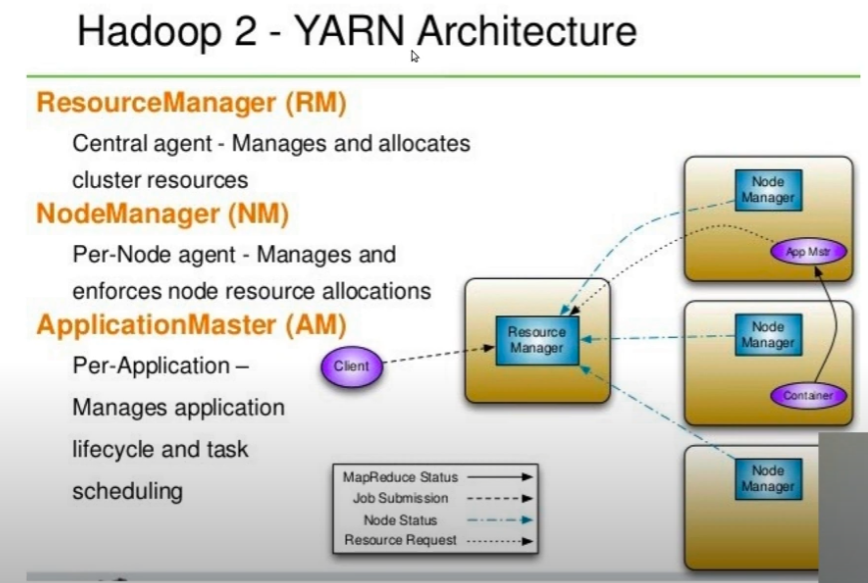

https://www.geeksforgeeks.org/hadoop-architecture/

Hadoop Architecture | Hadoop | HDFS | Map | Reduce | YARN | Big Data

https://www.youtube.com/watch?v=x87wXVZQwHM&ab_channel=TechCoreEasy

Hadoop v1

HDFS Introduction | QuickStart with HDFS | HDFS Architecture | Rack Awareness | Replication in HDFS

https://www.youtube.com/watch?v=lsdaYNEqEuk

Hadoop Installation | hadoop docker

git clone https://github.com/big-data-europe/docker-hadoop

cd docker-hadoop/

docker-compose up -d

docker container ls

docker exec -it namenode /bin/bash
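docker-compose up -d returns before the NameNode is ready, so the first hdfs commands can fail while it is still starting. A minimal retry sketch; wait_for is a hypothetical helper, and the usage line assumes the container is named namenode, as in the exec command above.

```shell
# Hypothetical helper: retry a command until it succeeds.
# $1 = maximum attempts, $2 = seconds to sleep after each failure,
# remaining arguments = the command to run.
wait_for() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}
```

Usage: wait_for 30 5 docker exec namenode hdfs dfs -ls / retries for up to about two and a half minutes until the NameNode answers.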

hdfs commands | hadoop

https://www.youtube.com/watch?v=vg7HNfyDrGk

1. List files - ls

hdfs dfs -ls /

2. Make a directory - mkdir

hdfs dfs -mkdir /techcoreeasy

3. Create an empty file - touchz

hdfs dfs -touchz /techcoreeasy/test.txt

4. Copy a file from the local file system to HDFS - put

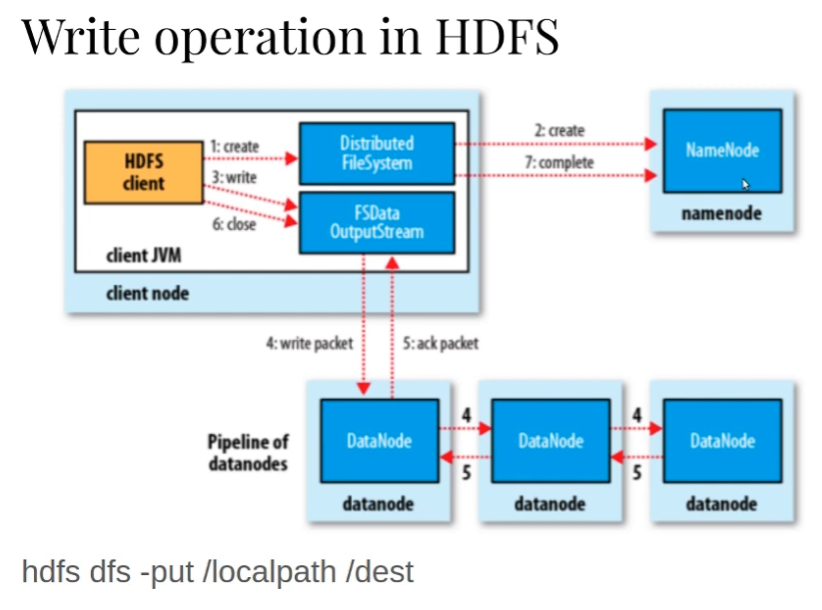

hdfs dfs -put tech.txt /techcoreeasy/

5. View file contents - cat

hdfs dfs -cat /techcoreeasy/tech.txt

6. Copy within HDFS - cp

hdfs dfs -cp /techcoreeasy /test_cp

7. Copy a file from HDFS to the local file system - get

hdfs dfs -get /techcoreeasy/test.txt .

8. Remove a file - rm

hdfs dfs -rm /techcoreeasy/test.txt

(-rmr is deprecated; use hdfs dfs -rm -r to remove a directory.)

9. Show the status of a file - stat

hdfs dfs -stat /path

10. List all commands (run with no arguments), or show details of each command with -help (for one command: hdfs dfs -help ls)

hdfs dfs

hdfs dfs -help
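The commands in the list can be chained into one function to run inside the namenode container. A sketch, assuming the paths from the list; hdfs_walkthrough and the HDFS override variable are hypothetical, added so the sequence can be dry-run with HDFS="echo hdfs" before touching a real cluster. -mkdir -p and -put -f keep those two steps from failing when the paths already exist.

```shell
# Hypothetical helper: run the HDFS commands from the list in order.
# HDFS defaults to the real client; HDFS="echo hdfs" only prints the commands.
# The body is a subshell, so set -e and the variables stay local to it.
hdfs_walkthrough() (
  set -e                                   # stop at the first failing step
  dir=${1:-/techcoreeasy}
  hdfs_cmd=${HDFS:-hdfs}
  echo "hello from the local file system" > tech.txt   # local file for -put
  # $hdfs_cmd is unquoted on purpose so "echo hdfs" splits into two words.
  $hdfs_cmd dfs -ls /
  $hdfs_cmd dfs -mkdir -p "$dir"
  $hdfs_cmd dfs -touchz "$dir/test.txt"
  $hdfs_cmd dfs -put -f tech.txt "$dir/"
  $hdfs_cmd dfs -cat "$dir/tech.txt"
  $hdfs_cmd dfs -cp "$dir" /test_cp
  $hdfs_cmd dfs -get "$dir/test.txt" .
  $hdfs_cmd dfs -rm "$dir/test.txt"
  $hdfs_cmd dfs -stat "%n %b %y" "$dir/tech.txt"   # name, size, modification time
)
```

Usage inside the container: HDFS="echo hdfs" hdfs_walkthrough to review the commands, then hdfs_walkthrough to run them. Note that -get fails if test.txt already exists in the local directory.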